How to Accelerate Learning of Deep Neural Networks With Batch Normalization

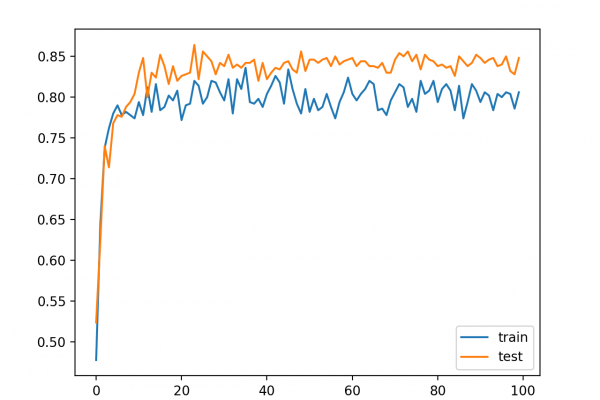

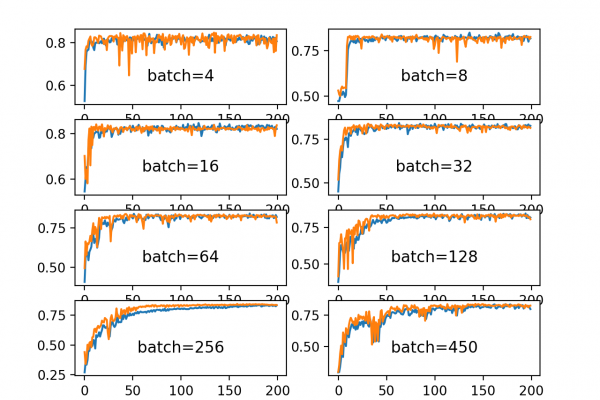

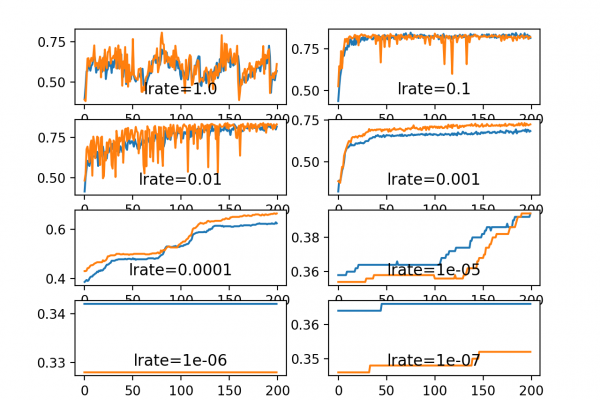

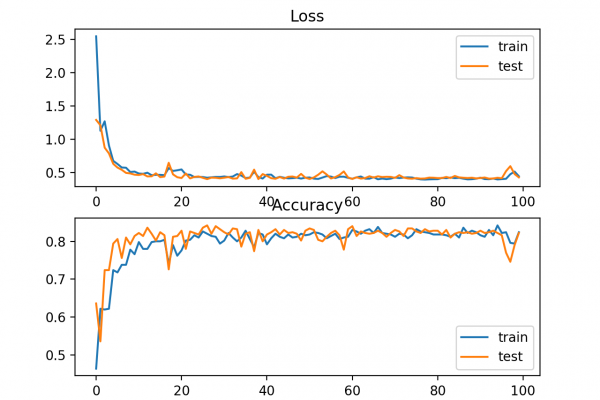

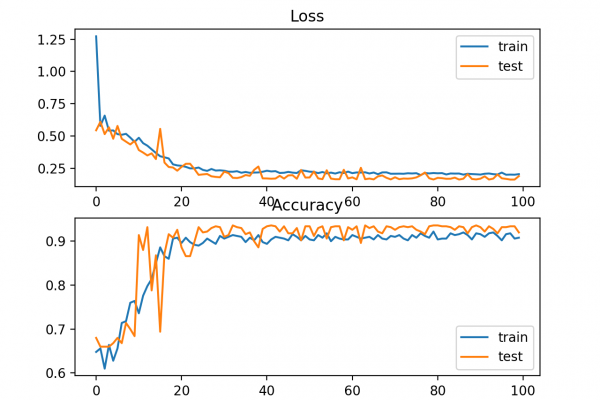

Last Updated on August 25, 2020

Batch normalization is a technique designed to automatically standardize the inputs to a layer in a deep learning neural network. It can dramatically accelerate the training of a neural network and, in some cases, improves the performance of the model via a modest regularization effect. In this tutorial, you will discover how to use batch normalization to accelerate the training of deep learning neural networks in Python […]
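To make "standardizing the inputs to a layer" concrete, here is a minimal NumPy sketch of the training-time computation. It is an illustration under stated assumptions, not the tutorial's own code: the function name `batch_norm_forward`, the `eps` value, and the toy batch are hypothetical, and the running statistics that a full implementation keeps for inference are left out.

```python
import numpy as np


def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Standardize a mini-batch of layer inputs, then scale and shift.

    x     : array of shape (batch_size, n_features)
    gamma : learned per-feature scale, shape (n_features,)
    beta  : learned per-feature shift, shape (n_features,)
    eps   : small constant for numerical stability (assumed value)
    """
    mean = x.mean(axis=0)                     # per-feature mean over the batch
    var = x.var(axis=0)                       # per-feature variance over the batch
    x_hat = (x - mean) / np.sqrt(var + eps)   # zero mean, roughly unit variance
    return gamma * x_hat + beta


# Toy mini-batch: 32 examples, 4 features, deliberately off-center and spread out.
rng = np.random.default_rng(0)
batch = rng.normal(loc=5.0, scale=3.0, size=(32, 4))

# With gamma = 1 and beta = 0 the output is simply the standardized batch.
out = batch_norm_forward(batch, gamma=np.ones(4), beta=np.zeros(4))
print(out.mean(axis=0))  # each feature's mean is close to 0
print(out.std(axis=0))   # each feature's standard deviation is close to 1
```

In a trained network, `gamma` and `beta` are learned along with the other weights, so the layer can rescale or shift its standardized inputs where that helps.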
