Framework for Better Deep Learning

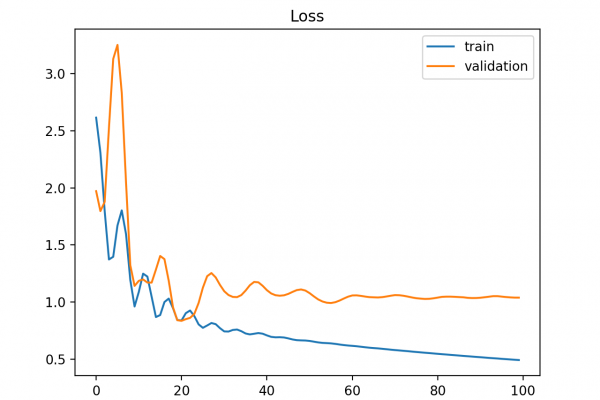

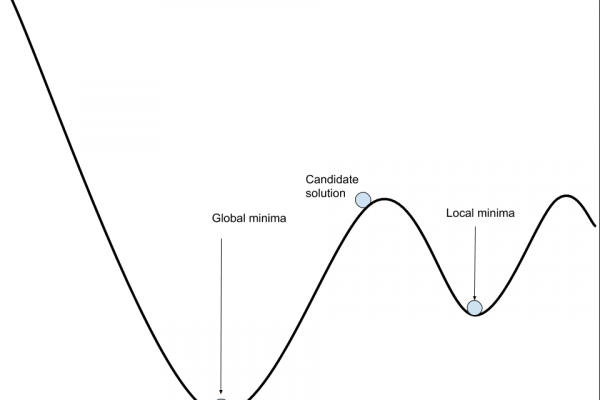

Last Updated on August 6, 2019

Modern deep learning libraries such as Keras allow you to define and start fitting a wide range of neural network models in minutes with just a few lines of code. Nevertheless, it is still challenging to configure a neural network to get good performance on a new predictive modeling problem. The challenge of getting good performance can be broken down into three main areas: problems with learning, problems with generalization, and problems with predictions. […]
