A Gentle Introduction to Ensemble Learning Algorithms

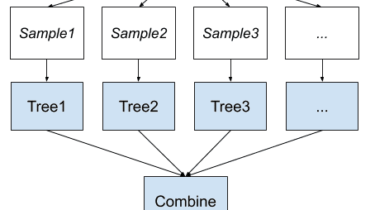

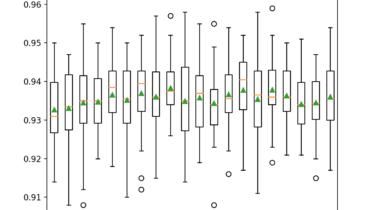

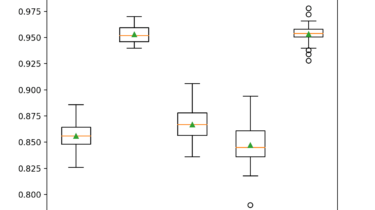

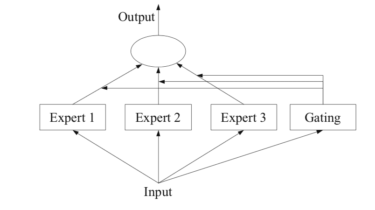

Ensemble learning is a general meta-approach to machine learning that seeks better predictive performance by combining the predictions from multiple models. Although there is a seemingly unlimited number of ensembles that you can develop for your predictive modeling problem, three methods dominate the field of ensemble learning. So much so that, rather than algorithms per se, each is a field of study that has spawned many more specialized methods. The three main classes of ensemble learning […]
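
To make "combining the predictions from multiple models" concrete, here is a minimal sketch of one simple way to combine them: a majority vote over class labels. The function name `majority_vote` and the three lists of predictions are made up for illustration; they are not taken from the article, and real ensembles typically combine fitted models rather than hard-coded lists.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine class labels from several models, returning one label per sample."""
    combined = []
    # zip(*...) regroups the input so each tuple holds every model's vote for one sample.
    for votes in zip(*predictions_per_model):
        # most_common(1) returns the label with the highest vote count.
        combined.append(Counter(votes).most_common(1)[0][0])
    return combined


# Hypothetical predictions from three models on the same four samples (illustrative values).
model_a = [1, 0, 1, 1]
model_b = [1, 1, 0, 1]
model_c = [0, 0, 1, 1]

ensemble = majority_vote([model_a, model_b, model_c])
print(ensemble)
```

Each model is wrong on a different sample here, so the vote can recover a label that any single model missed. An odd number of voters on a binary problem also avoids ties.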
