A Gentle Introduction to Multiple-Model Machine Learning

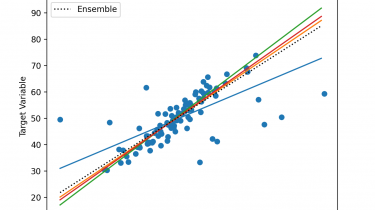

An ensemble learning method combines the predictions from multiple contributing models. However, not all techniques that use multiple machine learning models are ensemble learning algorithms. It is common to divide a prediction problem into subproblems. For example, some problems naturally subdivide into independent but related subproblems, and a machine learning model can be prepared for each. It is less clear whether these represent examples of ensemble learning, although we might distinguish these methods from ensembles given the […]
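The sketch below contrasts the two ideas in plain Python. In the decomposition case, each model solves its own subproblem and the outputs are simply collected. In the ensemble case, several models address the same problem and their predictions are combined. The excerpt does not name a model, so ordinary least-squares line fitting is used here as a stand-in. All function names (`fit_line`, `fit_per_target`, `fit_ensemble`, and so on) are hypothetical, and so is the interleaved-subset scheme for training ensemble members.

```python
from statistics import mean


def fit_line(xs, ys):
    """Stand-in model: ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def predict_line(model, x):
    slope, intercept = model
    return slope * x + intercept


# --- Decomposition: one model per subproblem (not an ensemble) ---
def fit_per_target(xs, targets):
    """Fit an independent model for each output (subproblem)."""
    return [fit_line(xs, ys) for ys in targets]


def predict_per_target(models, x):
    # Each model answers only its own subproblem; outputs are collected, not combined.
    return [predict_line(m, x) for m in models]


# --- Ensemble: several models for the same problem, predictions combined ---
def fit_ensemble(xs, ys, n_members=2):
    """Fit each member on a different interleaved subset of the data (hypothetical scheme)."""
    return [fit_line(xs[k::n_members], ys[k::n_members]) for k in range(n_members)]


def predict_ensemble(members, x):
    # All members predict the same target; their predictions are averaged into one answer.
    return mean(predict_line(m, x) for m in members)


if __name__ == "__main__":
    xs = [0, 1, 2, 3, 4, 5]
    targets = [[2 * x + 1 for x in xs], [-x + 4 for x in xs]]

    models = fit_per_target(xs, targets)
    print(predict_per_target(models, 10))  # [21.0, -6.0]

    members = fit_ensemble(xs, targets[0])
    print(predict_ensemble(members, 10))  # 21.0
```

The structural difference is in the prediction step. `predict_per_target` returns one answer per subproblem, while `predict_ensemble` collapses several answers to the same question into a single prediction.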
