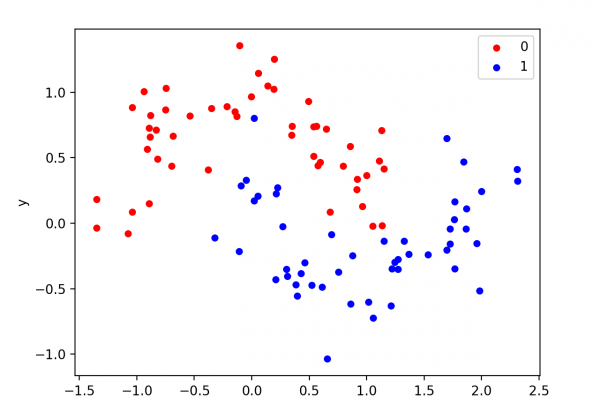

Visualizing the vanishing gradient problem

Deep learning is a relatively recent development. This is partly due to improved computing power, which allows us to use more layers of perceptrons in a neural network. At the same time, training a deep network became practical only once we learned how to work around the vanishing gradient problem. In this tutorial, we visually examine why the vanishing gradient problem exists. After completing this tutorial, you will know:

- What a vanishing gradient is
- Which configuration of neural network will be […]
