Why Training a Neural Network Is Hard

Last Updated on August 6, 2019

Or, Why Stochastic Gradient Descent Is Used to Train Neural Networks.

Fitting a neural network involves using a training dataset to update the model weights to create a good mapping of inputs to outputs.

This training process is carried out by an optimization algorithm that searches the space of possible model weights for a set of weights that results in good performance on the training dataset.

In this post, you will discover the challenge of training a neural network framed as an optimization problem.

After reading this post, you will know:

- Training a neural network involves using an optimization algorithm to find a set of weights to best map inputs to outputs.

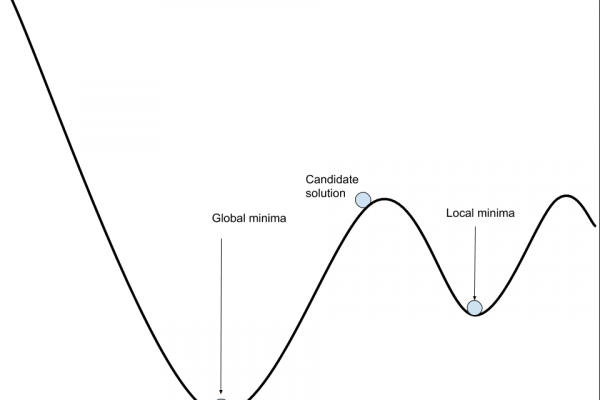

- The problem is hard, not least because the error surface is non-convex, high-dimensional, and contains local minima and flat spots.

- The stochastic gradient descent algorithm is the best general algorithm to address this challenging problem.
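To make the idea of searching for weights concrete, here is a minimal sketch of stochastic gradient descent on a toy problem. Everything in it is an assumption made for illustration and does not come from the post: the target function (y = x^2), the tiny one-hidden-layer network with tanh units, the learning rate, and helper names such as `sgd_step` and `train`. It uses only the Python standard library and updates the weights one example at a time.

```python
import math
import random


def make_data(n=21):
    # Hypothetical toy dataset: n evenly spaced inputs in [-1, 1] with targets x^2.
    xs = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    return [(x, x * x) for x in xs]


def init_weights(hidden, rng):
    # Small random weights; the search starts from this point in weight space.
    return {
        "w1": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    # One hidden layer of tanh units followed by a linear output.
    h = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    y_hat = sum(w * a for w, a in zip(p["w2"], h)) + p["b2"]
    return h, y_hat


def mse(p, data):
    # Mean squared error of the model over the whole dataset.
    return sum((forward(p, x)[1] - y) ** 2 for x, y in data) / len(data)


def sgd_step(p, x, y, lr):
    # Update every weight using the gradient of 0.5 * (y_hat - y)^2
    # computed from a single training example.
    h, y_hat = forward(p, x)
    err = y_hat - y
    for j in range(len(h)):
        # Backpropagate through the tanh unit before changing w2[j].
        grad_h = err * p["w2"][j] * (1.0 - h[j] ** 2)
        p["w2"][j] -= lr * err * h[j]
        p["w1"][j] -= lr * grad_h * x
        p["b1"][j] -= lr * grad_h
    p["b2"] -= lr * err


def train(epochs=300, lr=0.05, hidden=4, seed=0):
    rng = random.Random(seed)
    data = make_data()
    p = init_weights(hidden, rng)
    initial = mse(p, data)
    for _ in range(epochs):
        rng.shuffle(data)  # the "stochastic" part: visit examples in random order
        for x, y in data:
            sgd_step(p, x, y, lr)
    return initial, mse(p, data)


if __name__ == "__main__":
    initial_loss, final_loss = train()
    print(f"MSE before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Running the script prints the training error before and after the weight updates. Changing `seed` starts the search from a different point on the error surface, which on a non-convex problem can lead to a different final set of weights.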


Let’s get started.

To finish reading, please visit source site