How to Control the Stability of Training Neural Networks With the Batch Size

Last Updated on August 28, 2020

Neural networks are trained using gradient descent, where the estimate of the error gradient used to update the weights is calculated from a subset of the training dataset.

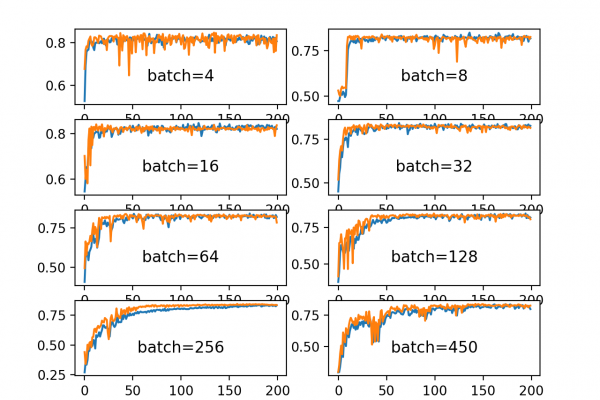

The number of examples from the training dataset used in the estimate of the error gradient is called the batch size and is an important hyperparameter that influences the dynamics of the learning algorithm.

It is important to explore how the batch size affects the learning dynamics of your model to ensure that you’re getting the most out of it.

In this tutorial, you will discover three different flavors of gradient descent and how to explore and diagnose the effect of batch size on the learning process.

After completing this tutorial, you will know:

- Batch size controls the accuracy of the estimate of the error gradient when training neural networks.

- Batch, Stochastic, and Minibatch gradient descent are the three main flavors of the learning algorithm.

- There is a tension between batch size and the speed and stability of the learning process.
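As a rough illustration of the points above, the three flavors can be told apart by how the batch size compares to the size of the training dataset. The sketch below assumes the usual definitions (batch uses every example per update, stochastic uses one, minibatch uses something in between); the function names and the dataset size of 1,000 examples are hypothetical and chosen only for this example.

```python
import math


def gradient_descent_flavor(n_train, batch_size):
    """Name the flavor of gradient descent implied by a batch size.

    Hypothetical helper for illustration only.
    """
    if not 1 <= batch_size <= n_train:
        raise ValueError("batch_size must be between 1 and n_train")
    if batch_size == n_train:
        return "batch"       # every training example is used for each update
    if batch_size == 1:
        return "stochastic"  # a single example is used for each update
    return "minibatch"       # more than one example, fewer than all of them


def updates_per_epoch(n_train, batch_size):
    """Number of weight updates made in one pass over the training dataset."""
    return math.ceil(n_train / batch_size)


n_train = 1000  # assumed dataset size, for illustration only
for batch_size in (n_train, 1, 32):
    flavor = gradient_descent_flavor(n_train, batch_size)
    updates = updates_per_epoch(n_train, batch_size)
    print(f"batch_size={batch_size}: {flavor}, {updates} updates per epoch")
```

A smaller batch size means more weight updates per epoch, each based on a noisier estimate of the error gradient, which is one way to picture the tension between speed and stability.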


Let’s get started.

- Updated Oct/2019:
