How to Use Data Scaling to Improve Deep Learning Model Stability and Performance

Last Updated on August 25, 2020

Deep learning neural networks learn how to map inputs to outputs from examples in a training dataset.

The weights of the model are initialized to small random values and updated via an optimization algorithm in response to estimates of error on the training dataset.

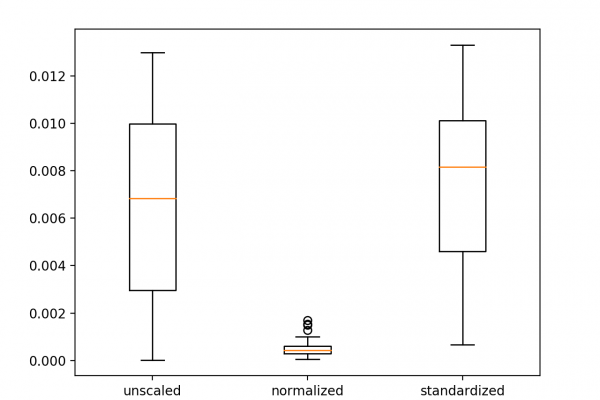

Given the use of small weights in the model and the use of error between predictions and expected values, the scale of the inputs and outputs used to train the model is an important factor. Unscaled input variables can result in a slow or unstable learning process, whereas unscaled target variables on regression problems can result in exploding gradients that cause the learning process to fail.
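To make the effect of scale concrete, here is a minimal sketch that assumes a single linear unit with one weight trained by plain gradient descent on squared error. It is not a real neural network, and the names `squared_error_gradient` and `train` are hypothetical helpers introduced only for this illustration. The same underlying relationship (output is twice the input) is fit once with small values and once with values 100 times larger.

```python
def squared_error_gradient(w, x, y):
    """Gradient of the loss (w * x - y) ** 2 with respect to the weight w."""
    return 2.0 * (w * x - y) * x


def train(x, y, lr=0.01, steps=20, w=0.01):
    """Run a few steps of gradient descent on one (x, y) example.

    Hypothetical helper: the weight starts at a small value, as described
    above, and is updated in response to the error on the example.
    """
    for _ in range(steps):
        w -= lr * squared_error_gradient(w, x, y)
    return w


# The true relationship in both cases is y = 2 * x, so the ideal weight is 2.
w_scaled = train(x=1.0, y=2.0)        # inputs and targets near 1
w_unscaled = train(x=100.0, y=200.0)  # same data, 100 times larger

# Size of the very first gradient for a small starting weight.
grad_scaled = squared_error_gradient(0.01, 1.0, 2.0)
grad_unscaled = squared_error_gradient(0.01, 100.0, 200.0)

print("first gradient (scaled):  ", grad_scaled)
print("first gradient (unscaled):", grad_unscaled)
print("weight after training (scaled):  ", w_scaled)
print("weight after training (unscaled):", w_unscaled)
```

With the small values, the weight moves steadily toward 2. With the larger values and the same learning rate, the first gradient is orders of magnitude bigger and each update overshoots further than the last, so the weight diverges instead of converging.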

Data preparation involves using techniques such as normalization and standardization to rescale input and output variables prior to training a neural network model.
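The two techniques can be sketched in a few lines of plain Python. This sketch assumes that normalization means rescaling a variable to the range 0 to 1 using its minimum and maximum, and that standardization means subtracting the mean and dividing by the standard deviation. The function names `normalize` and `standardize` are hypothetical and are used here only for illustration.

```python
from statistics import mean, pstdev


def normalize(values):
    """Rescale values to the range [0, 1] using the observed min and max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant variable carries no spread; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale values to have zero mean and unit standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


data = [10.0, 20.0, 30.0, 40.0, 50.0]
normalized = normalize(data)
standardized = standardize(data)

print(normalized)
print(standardized)
```

In practice the minimum, maximum, mean, and standard deviation would normally be estimated on the training data only and then reused to transform any new data, so that the model sees consistently scaled values.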

In this tutorial, you will discover how to improve neural network stability and modeling performance by scaling data.

After completing this tutorial, you will know:

- Data scaling is a recommended pre-processing step when working with deep learning neural networks.

- Data scaling can be achieved by normalizing or standardizing real-valued input and output variables.

- How to apply standardization and normalization to
