Mini-Course on Long Short-Term Memory Recurrent Neural Networks with Keras

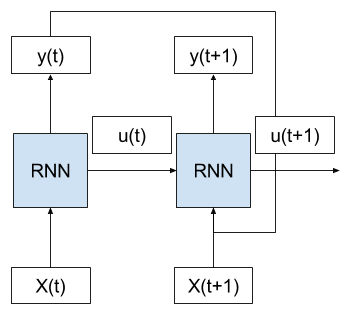

Last Updated on August 14, 2019

Long Short-Term Memory (LSTM) recurrent neural networks are one of the most interesting types of deep learning model at the moment. They have been used to demonstrate world-class results in complex problem domains such as language translation, automatic image captioning, and text generation. LSTMs differ from multilayer perceptrons and convolutional neural networks in that they are designed specifically for sequence prediction problems. In this mini-course, you will discover how you can quickly bring LSTM […]

Read more