A Gentle Introduction to RNN Unrolling

Last Updated on August 14, 2019

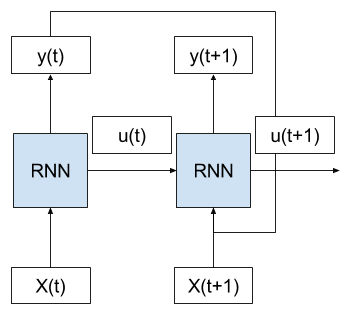

Recurrent neural networks are a type of neural network where the outputs from previous time steps are fed as input to the current time step.

This creates a network graph or circuit diagram with cycles, which can make it difficult to understand how information moves through the network.

In this post, you will discover the concept of unrolling or unfolding recurrent neural networks.

After reading this post, you will know:

- The standard conception of recurrent neural networks with cyclic connections.

- The concept of unrolling the forward pass, in which the network is copied for each input time step.

- The concept of unrolling the backward pass to update network weights during training.


Let’s get started.

Unrolling Recurrent Neural Networks

Recurrent neural networks are a type of neural network where outputs from previous time steps are taken as inputs for the current time step.
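
As a minimal sketch of this idea, assume a single-unit network with scalar weights chosen purely for illustration. The names `rnn_step`, `w_x`, `w_y`, and `b` are hypothetical and do not come from any library. The feedback connection can be written as an ordinary loop, and writing the same computation out for three time steps gives one copy of the cell per step, all sharing the same weights:

```python
import math


def rnn_step(x_t, y_prev, w_x=0.5, w_y=0.8, b=0.0):
    """One time step: combine the current input with the previous output.

    The weight values are arbitrary illustrative assumptions.
    """
    return math.tanh(w_x * x_t + w_y * y_prev + b)


def run_recurrent(inputs, y0=0.0):
    """Cyclic view: a single cell applied in a loop, feeding its output back in."""
    y = y0
    outputs = []
    for x_t in inputs:
        y = rnn_step(x_t, y)
        outputs.append(y)
    return outputs


def run_unrolled_3(inputs, y0=0.0):
    """Unrolled view for exactly three time steps: one copy of the cell per step."""
    x1, x2, x3 = inputs
    y1 = rnn_step(x1, y0)  # copy 1 sees the initial output y0
    y2 = rnn_step(x2, y1)  # copy 2 sees the output of copy 1
    y3 = rnn_step(x3, y2)  # copy 3 sees the output of copy 2
    return [y1, y2, y3]


inputs = [1.0, 0.5, -0.2]
looped = run_recurrent(inputs)
unrolled = run_unrolled_3(inputs)
print(looped == unrolled)
```

Both functions perform the same arithmetic in the same order, so their outputs match. The unrolled version simply has no cycle: each step is an explicit call whose result is passed forward to the next.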

We can demonstrate this with a picture.

Below we can see that the network takes both the output of the network from the
