A small Python app to create Notion pages from Jira issues

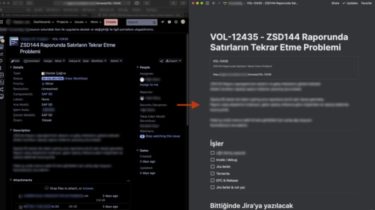

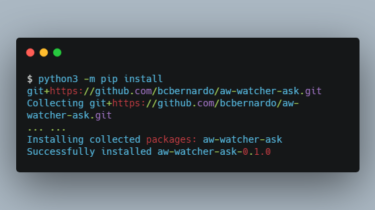

Jira to Notion

This small program captures a Jira issue and creates a corresponding Notion subpage. On a Mac, it can fetch the current issue from the foremost Chrome or Safari window; on other systems, a popup asks for the issue number.

Installation (OS independent)

Install Python first. The program won't work without it, and the official Python docs are enough to walk you through the installation.

Create a folder (for example, j2n), and […]
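The capture flow described above (read the issue from the foremost browser tab on a Mac, otherwise ask for it in a popup, then create a Notion subpage) could be sketched in Python roughly as follows. This is a minimal sketch, not the program's actual code: every function name here is hypothetical, it assumes issue keys of the usual `PROJ-123` form, it queries Chrome before Safari rather than determining which window is truly foremost, it reads browser URLs through AppleScript via `osascript`, and it uses a Tk dialog as the popup.

```python
import platform
import re
import subprocess

# Assumption: issue keys follow Jira's usual "PROJ-123" shape
# (an uppercase project key, a dash, then the issue number).
ISSUE_KEY_RE = re.compile(r"\b([A-Z][A-Z0-9_]+-\d+)\b")

# AppleScript expressions returning the URL of each browser's foremost tab.
BROWSER_URL_EXPR = {
    "Google Chrome": "URL of active tab of front window",
    "Safari": "URL of front document",
}


def extract_issue_key(text):
    """Return the first Jira-style issue key in text, or None."""
    match = ISSUE_KEY_RE.search(text or "")
    return match.group(1) if match else None


def front_browser_url(browser):
    """Return the URL of the browser's foremost tab on macOS, else None."""
    if platform.system() != "Darwin" or browser not in BROWSER_URL_EXPR:
        return None
    # Only query the browser if it is already running, so we don't launch it.
    lines = [
        f'if application "{browser}" is running then',
        f'tell application "{browser}" to return {BROWSER_URL_EXPR[browser]}',
        "end if",
    ]
    cmd = ["osascript"]
    for line in lines:
        cmd += ["-e", line]
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    except (OSError, subprocess.CalledProcessError):
        return None
    return result.stdout.strip() or None


def ask_for_issue_key():
    """Fallback: show a small Tk popup asking for the issue key."""
    import tkinter
    from tkinter import simpledialog

    root = tkinter.Tk()
    root.withdraw()  # hide the empty main window; show only the dialog
    answer = simpledialog.askstring("Jira to Notion", "Jira issue (e.g. PROJ-123):")
    root.destroy()
    return extract_issue_key((answer or "").upper())


def get_issue_key():
    """Try the Chrome, then Safari, foremost tab; fall back to the popup."""
    for browser in BROWSER_URL_EXPR:
        key = extract_issue_key(front_browser_url(browser))
        if key:
            return key
    return ask_for_issue_key()


def build_notion_page_payload(parent_page_id, issue_key, summary, issue_url):
    """Build a body for Notion's create-page endpoint (POST /v1/pages)
    that adds a subpage under parent_page_id linking back to the issue."""
    return {
        "parent": {"page_id": parent_page_id},
        "properties": {
            "title": {"title": [{"text": {"content": f"{issue_key}: {summary}"}}]}
        },
        "children": [
            {
                "object": "block",
                "type": "paragraph",
                "paragraph": {
                    "rich_text": [
                        {"type": "text",
                         "text": {"content": issue_url, "link": {"url": issue_url}}}
                    ]
                },
            }
        ],
    }
```

Fetching the issue summary from Jira's REST API and sending the payload to Notion with an integration token are left out; the payload only follows the shape Notion's API uses for creating a page under a parent page. Keeping key extraction and payload building as pure functions makes them easy to test without a browser or network access.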
