Beginner’s Guide To Text Classification Using PyCaret

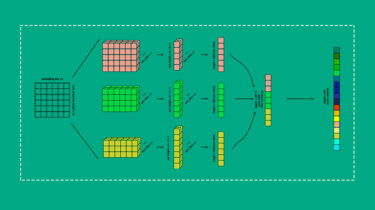

Introduction

Have you ever solved a machine learning problem in just one go? Solving a problem with machine learning is rarely straightforward; it takes several steps to arrive at an accurate solution. The sequence of steps followed to solve an ML problem is known as the ML pipeline, or ML cycle.

Figure: ML Pipeline/ML Cycle (Credits: https://medium.com/analytics-vidhya/machine-learning-development-life-cycle-dfe88c44222e)

As shown in the figure, the machine learning pipeline consists of steps such as Understand Problem Statement, Hypothesis Generation, Exploratory Data Analysis, Data Preprocessing, Feature Engineering, […]
