Neural Semi-supervised Learning for Text Classification Under Large-Scale Pretraining

Neural-Semi-Supervised-Learning-for-Text-Classification


Download Models and Dataset

The datasets and models can be found in the following list.

- Download the 3.4M IMDB movie reviews and save the data at [REVIEWS_PATH]. You can download the dataset HERE.
- Download the vanilla RoBERTa-large model released by HuggingFace and save the model at [VANILLA_ROBERTA_LARGE_PATH]. You can download the model HERE.
- Download the in-domain pretrained models from the paper and save them at [PRETRAIN_MODELS]. We provide the following three models.

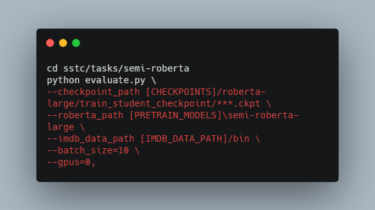

You can download the models HERE.

- init-roberta-base: a RoBERTa-base model (U) trained over the 3.4M movie reviews from scratch.
- semi-roberta-base: a RoBERTa-base model (Large U + U) trained over the 3.4M movie reviews, starting from the open-domain pretrained RoBERTa-base model.
- semi-roberta-large: a RoBERTa-large model (Large U + U) trained over the 3.4M movie reviews, starting from the open-domain pretrained model
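As a rough sketch (not part of the released code), the snippet below checks that the three download locations are populated before any training run. It assumes that the placeholders [REVIEWS_PATH], [VANILLA_ROBERTA_LARGE_PATH] and [PRETRAIN_MODELS] are supplied as environment variables of the same name, that every model is stored as a HuggingFace-style directory containing a `config.json`, and that the three in-domain models sit in sub-directories of [PRETRAIN_MODELS] named `init-roberta-base`, `semi-roberta-base` and `semi-roberta-large`. The function name `missing_items` is hypothetical.

```python
"""Hypothetical sanity check for the download layout described in this README.

Assumptions (not confirmed by the repository):
  * the README placeholders are passed as environment variables of the same name;
  * each model is a HuggingFace-style directory containing config.json;
  * the three in-domain models live in sub-directories of PRETRAIN_MODELS
    named after the models.
"""
import os
from pathlib import Path

# Names of the three in-domain pretrained models listed in the README.
PRETRAINED_MODEL_NAMES = ("init-roberta-base", "semi-roberta-base", "semi-roberta-large")


def missing_items(reviews_path, vanilla_path, pretrain_root):
    """Return human-readable problems; an empty list means the layout looks complete."""
    problems = []
    # The reviews may be stored as a single file or as a directory; accept either.
    if not Path(reviews_path).exists():
        problems.append(f"reviews not found: {reviews_path}")
    # A HuggingFace checkpoint directory is expected to contain config.json.
    if not (Path(vanilla_path) / "config.json").is_file():
        problems.append(f"no config.json in vanilla RoBERTa-large dir: {vanilla_path}")
    # Check each in-domain model under its (hypothetical) sub-directory name.
    for name in PRETRAINED_MODEL_NAMES:
        model_dir = Path(pretrain_root) / name
        if not (model_dir / "config.json").is_file():
            problems.append(f"no config.json for {name}: {model_dir}")
    return problems


if __name__ == "__main__":
    found = missing_items(
        os.environ.get("REVIEWS_PATH", "REVIEWS_PATH"),
        os.environ.get("VANILLA_ROBERTA_LARGE_PATH", "VANILLA_ROBERTA_LARGE_PATH"),
        os.environ.get("PRETRAIN_MODELS", "PRETRAIN_MODELS"),
    )
    for problem in found:
        print(problem)
    if not found:
        print("all expected files are in place")
```

A directory that loads with HuggingFace's `from_pretrained` also needs weight and tokenizer files, so an empty result here is only a first check, not a guarantee that the models will load.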