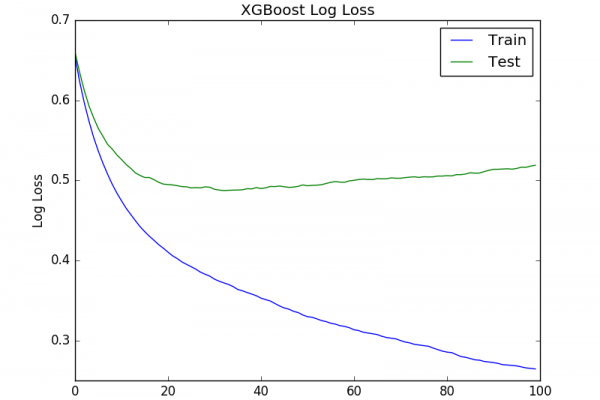

Avoid Overfitting By Early Stopping With XGBoost In Python

Last Updated on August 27, 2020

Overfitting is a problem with sophisticated non-linear learning algorithms like gradient boosting. In this post you will discover how you can use early stopping to limit overfitting with XGBoost in Python.

After reading this post, you will know:

- About early stopping as an approach to reducing overfitting of training data.
- How to monitor the performance of an XGBoost model during training and plot the learning curve.
- How to use early stopping to prematurely stop […]
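To make the early stopping idea concrete, here is a minimal, library-free sketch of the stopping rule: training halts once the validation loss has failed to improve for a set number of rounds. This is not XGBoost's own code. The function name `early_stopping_round`, the `patience` argument, and the loss values are all hypothetical, made up for illustration. In XGBoost itself, the equivalent behaviour is requested through the `early_stopping_rounds` parameter together with an evaluation set.

```python
def early_stopping_round(val_losses, patience):
    """Return (stop_round, best_round) for per-round validation losses.

    Illustrative helper (hypothetical, not part of XGBoost): stop as soon
    as `patience` rounds have passed without a new best (lowest) loss.
    Rounds are 0-indexed.
    """
    best_loss = float("inf")
    best_round = -1
    for round_idx, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best validation loss: remember it and keep training.
            best_loss = loss
            best_round = round_idx
        elif round_idx - best_round >= patience:
            # No improvement for `patience` rounds: stop here.
            return round_idx, best_round
    # Ran out of rounds without triggering the stopping rule.
    return len(val_losses) - 1, best_round


# Made-up validation losses: improving at first, then degrading (overfitting).
losses = [0.60, 0.52, 0.47, 0.45, 0.46, 0.47, 0.48, 0.50]
stop_round, best_round = early_stopping_round(losses, patience=3)
print(stop_round, best_round)  # prints "6 3"
```

In this toy run the best loss occurs at round 3, and training stops at round 6 after three rounds with no improvement, so the model from round 3 is the one worth keeping.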
