Avoid Overfitting By Early Stopping With XGBoost In Python

Last Updated on August 27, 2020

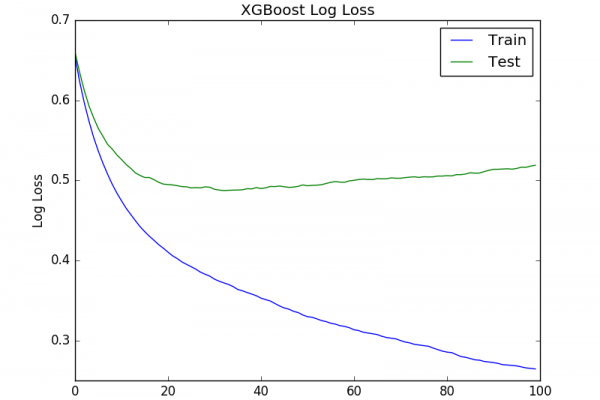

Overfitting is a problem with sophisticated non-linear learning algorithms like gradient boosting.

In this post, you will discover how to use early stopping to limit overfitting with XGBoost in Python.

After reading this post, you will know:

- About early stopping as an approach to reducing overfitting to the training data.

- How to monitor the performance of an XGBoost model during training and plot the learning curve.

- How to use early stopping to halt the training of an XGBoost model early, at the optimal number of boosting rounds (epochs).
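To make the idea concrete, here is a minimal plain-Python sketch of a patience-style early stopping rule, similar in spirit to the one XGBoost exposes through its `early_stopping_rounds` parameter. Training halts once the validation loss has failed to improve for a set number of consecutive boosting rounds, and the best round seen so far is kept. The function name `early_stopping` and the loss values below are hypothetical, chosen for illustration. This is not XGBoost's own implementation, only the rule it applies.

```python
from typing import List, Tuple


def early_stopping(val_losses: List[float], patience: int) -> Tuple[int, int]:
    """Apply a patience-based early stopping rule to per-round validation losses.

    Hypothetical helper for illustration only. Returns (best_round, stop_round),
    both 0-indexed: training would stop after stop_round, and the model from
    best_round would be kept.
    """
    if patience < 1:
        raise ValueError("patience must be at least 1")
    if not val_losses:
        raise ValueError("val_losses must not be empty")

    best_round = 0
    best_loss = float("inf")
    for round_idx, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best score on the validation set: remember it.
            best_loss = loss
            best_round = round_idx
        elif round_idx - best_round >= patience:
            # No improvement for `patience` consecutive rounds: stop training here.
            return best_round, round_idx
    # Ran out of rounds before the stopping rule was triggered.
    return best_round, len(val_losses) - 1


# Hypothetical validation loss curve: it improves, then rises as the model overfits.
losses = [0.60, 0.50, 0.45, 0.43, 0.44, 0.46, 0.47, 0.49, 0.52]
print(early_stopping(losses, patience=3))  # prints (3, 6)
```

With a patience of 3, the loss bottoms out at round 3, fails to improve in rounds 4, 5, and 6, and training stops after round 6 while keeping the model from round 3. A larger patience tolerates longer plateaus at the cost of extra training rounds.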

Let’s get started.

- Update Jan/2017: Updated to reflect changes in scikit-learn API version 0.18.1.

- Update Mar/2018: Added alternate link to download the dataset as the original appears to have been taken down.

Photo by Michael Hamann, some rights reserved.