Word2Vec For Word Embeddings - A Beginner’s Guide

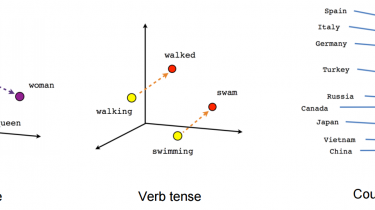

This article was published as a part of the Data Science Blogathon.

Why are word embeddings needed? Consider the two sentences “You can scale your business.” and “You can grow your business.” These two sentences have essentially the same meaning. A vocabulary built from these two sentences consists of six words: {You, can, scale, grow, your, business}. A one-hot encoding represents each of these words as a vector of length 6. The encodings for each of […]
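As a minimal sketch of the one-hot encoding described above, the snippet below builds the six vectors in plain Python. The word order follows the vocabulary as listed, and the helper names `one_hot` and `dot` are illustrative assumptions rather than anything taken from the article.

```python
# Vocabulary from the two example sentences, in the order listed above.
vocab = ["You", "can", "scale", "grow", "your", "business"]


def one_hot(word, vocab):
    """Return a list of len(vocab) zeros with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# One vector of length 6 per vocabulary word.
encodings = {word: one_hot(word, vocab) for word in vocab}

for word, vec in encodings.items():
    print(f"{word:>8}: {vec}")

# Any two distinct one-hot vectors are orthogonal, so their dot product is 0.
print(dot(encodings["scale"], encodings["grow"]))
```

In this representation, "scale" and "grow" are exactly as unrelated as any other pair of distinct words, even though they are near-synonyms in these sentences. That is the kind of limitation word embeddings are meant to address.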
