An Essential Guide to Pretrained Word Embeddings for NLP Practitioners

Overview

- Understand the importance of pretrained word embeddings

- Learn about two popular pretrained word embeddings – Word2Vec and GloVe

- Compare the performance of pretrained word embeddings against embeddings learned from scratch

Introduction

How do we make machines understand text data? Machines are supremely adept at working with numerical data, but they sputter when we feed them raw text.

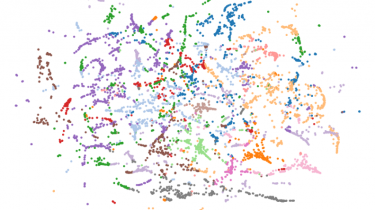

The idea is to create a representation of words that captures their meanings, their semantic relationships and the different contexts they are used in. That's what word embeddings are: numerical representations of words, typically as vectors of real numbers.
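To make "capturing semantic relationships" concrete, here is a minimal sketch, assuming a few hand-picked toy vectors (the words and numbers are hypothetical and are not real embeddings). Words with related meanings should point in similar directions, and cosine similarity is a common way to measure this.

```python
import math

# Hypothetical 4-dimensional vectors, hand-picked for illustration only.
# Real embeddings such as Word2Vec or GloVe are learned from large corpora
# and typically have 50-300 dimensions.
toy_embeddings = {
    "king":  [0.8, 0.6, 0.1, 0.2],
    "queen": [0.7, 0.7, 0.1, 0.3],
    "apple": [0.1, 0.0, 0.9, 0.8],
}

def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

king_queen = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
king_apple = cosine_similarity(toy_embeddings["king"], toy_embeddings["apple"])
print(f"king vs queen: {king_queen:.3f}")
print(f"king vs apple: {king_apple:.3f}")
```

With these toy values, "king" scores much closer to "queen" than to "apple", which is the kind of structure a good embedding is expected to learn from data rather than have set by hand.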

And pretrained word embeddings are a key cog in today’s Natural Language Processing (NLP) space.
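As a rough illustration of how pretrained vectors are usually consumed, here is a minimal sketch, assuming a GloVe-style plain-text file (one word per line followed by its space-separated vector components, the layout used by the downloadable glove.6B.*d.txt files). The sample lines and vocabulary below are made up, and the helper `load_glove_format` is hypothetical, not part of any library.

```python
import io

# Stand-in for a GloVe-style text file; the numbers are invented for illustration.
sample_file = io.StringIO(
    "the 0.1 0.2 0.3\n"
    "cat 0.4 0.5 0.6\n"
    "dog 0.4 0.6 0.5\n"
)

def load_glove_format(lines):
    """Hypothetical helper: parse GloVe-style lines into {word: [floats]}."""
    embeddings = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if not parts[0]:
            continue  # skip blank lines
        word, values = parts[0], parts[1:]
        embeddings[word] = [float(x) for x in values]
    return embeddings

embeddings = load_glove_format(sample_file)

# Build an embedding matrix for a hypothetical model vocabulary. Words missing
# from the pretrained file get a zero vector (one simple, common choice).
vocab = ["cat", "dog", "unicorn"]
dim = len(next(iter(embeddings.values())))
embedding_matrix = [embeddings.get(w, [0.0] * dim) for w in vocab]
print(embedding_matrix)
```

A matrix built this way is typically used to initialize a model's embedding layer, which can then be frozen or fine-tuned; the zero-vector fallback for out-of-vocabulary words is only one option (random initialization is another).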

But the question remains: do pretrained word embeddings give our NLP model an extra edge? That's an important point you