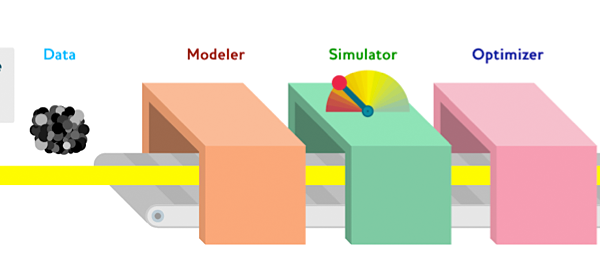

Clever Application Of A Predictive Model

Last Updated on August 15, 2020

What if you could use a predictive model to find new combinations of attributes that do not exist in the data but could be valuable? In Chapter 10 of Applied Predictive Modeling, Kuhn and Johnson provide a case study that does just this. It’s a fascinating and creative example of how to use a predictive model. In this post, we will discover this less obvious use of a predictive model and the types of […]
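Here is a minimal sketch of the general idea. It assumes the predictive model is already fitted and exposed as a function that maps a combination of attribute values to a predicted outcome. The sketch samples candidate combinations within each attribute's range, discards any that already appear in the data, and ranks the rest by predicted value. The name `search_new_combinations`, the plain random search, and the `toy_model` stand-in are hypothetical illustrations, not the procedure used in the book.

```python
import random
from typing import Callable, Sequence

Point = tuple[float, ...]


def search_new_combinations(
    predict: Callable[[Point], float],
    bounds: Sequence[tuple[float, float]],
    observed: set[Point],
    n_candidates: int = 5000,
    top_k: int = 3,
    seed: int = 0,
) -> list[tuple[float, Point]]:
    """Randomly sample attribute combinations within `bounds`, skip any that
    already occur in `observed`, and return the `top_k` candidates ranked by
    the model's prediction (highest first) as (score, point) pairs."""
    rng = random.Random(seed)
    scored: list[tuple[float, Point]] = []
    for _ in range(n_candidates):
        # Round to 2 decimals so candidates are comparable with observed rows.
        point = tuple(round(rng.uniform(lo, hi), 2) for lo, hi in bounds)
        if point in observed:
            continue  # keep only combinations that are not already in the data
        scored.append((predict(point), point))
    scored.sort(reverse=True)
    return scored[:top_k]


def toy_model(x: Point) -> float:
    """Hypothetical stand-in for a fitted model; it peaks at (0.6, 0.3)."""
    return -((x[0] - 0.6) ** 2) - ((x[1] - 0.3) ** 2)


# Hypothetical attribute combinations that already exist in the data.
OBSERVED: set[Point] = {(0.1, 0.2), (0.5, 0.5), (0.9, 0.1)}

if __name__ == "__main__":
    for score, point in search_new_combinations(
        toy_model, [(0.0, 1.0), (0.0, 1.0)], OBSERVED
    ):
        print(point, round(score, 4))
```

Random search is used here only because it is easy to read. A real application would plug in the fitted model and could use a proper optimizer instead. Any promising combination is still a prediction outside the observed data, so it would need to be confirmed in practice.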
