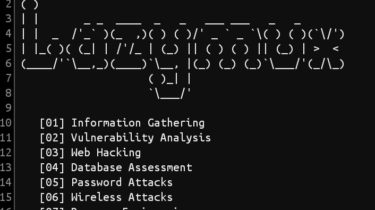

A tool installer made specifically for Termux users.

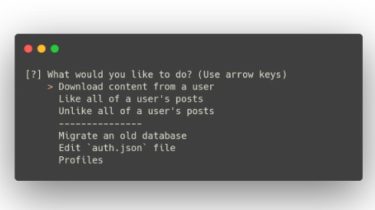

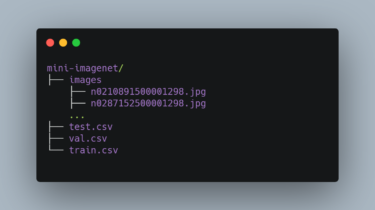

Lazymux is a tool installer made specifically for Termux users. It provides many of the tools most commonly used in Termux, it is easy to use, and it can install any of the tools it lists by itself with just one click. It is updated often.

Features:

- Install a single tool: `lzmx > set_install 1`
- Install multiple tools: `lzmx > set_install 1 2 3 4`
- Install all tools: `lzmx > set_install @`
- Default install directory: in `lazymux.conf`, replace the symbol `~` with the directory […]
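Lazymux's own source is not shown here. As a rough illustration of the three argument forms accepted by `set_install` (a single number, several numbers, or `@` for every tool), here is a minimal Python sketch of how such an argument string could be resolved into tool numbers. It assumes a menu of numbered tools starting at 1; the function name `parse_set_install` and the tool count `TOTAL_TOOLS` are hypothetical and are not part of Lazymux.

```python
# Hypothetical sketch, NOT Lazymux's actual code: resolve the argument string
# given to `set_install` into a list of 1-based tool numbers.

TOTAL_TOOLS = 10  # assumed number of tools in the menu, for illustration only


def parse_set_install(args: str, total: int = TOTAL_TOOLS) -> list[int]:
    """Return the tool numbers selected by a set_install argument string."""
    tokens = args.split()
    if not tokens:
        raise ValueError("set_install needs at least one tool number or '@'")
    if tokens == ["@"]:
        # "@" on its own selects every tool in the menu
        return list(range(1, total + 1))
    indices = []
    for tok in tokens:
        # every other token must be a plain tool number
        if not tok.isdigit():
            raise ValueError(f"not a tool number: {tok!r}")
        n = int(tok)
        if not 1 <= n <= total:
            raise ValueError(f"tool number out of range: {n}")
        indices.append(n)
    return indices


if __name__ == "__main__":
    print(parse_set_install("1"))        # [1]
    print(parse_set_install("1 2 3 4"))  # [1, 2, 3, 4]
    print(len(parse_set_install("@")))   # 10
```

Rejecting unknown tokens and out-of-range numbers up front keeps a typo from silently installing the wrong tool; how Lazymux itself handles such input is not stated in the source.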
