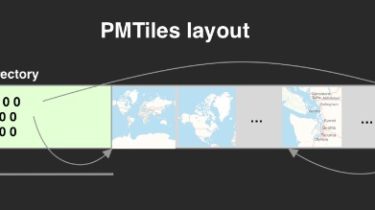

Cloud-optimized, single-file archive format for pyramids of map tiles

PMTiles is a single-file archive format for tiled data. A PMTiles archive can be hosted on a commodity storage platform such as S3, enabling low-cost, zero-maintenance map applications that are "serverless": they need no custom tile backend or third-party provider.

- Protomaps Blog: Dynamic Maps, Static Storage
- Leaflet + Raster Tiles Demo (watch your network request log)
- MapLibre GL + Vector Tiles Demo (requires MapLibre GL JS v1.14.1-rc.2 or later)

See also: How To Use Go […]
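Serving tiles from a single static file works because a client can fetch only the bytes it needs using HTTP Range requests: it reads a small fixed-size header first, then the directory and tile byte spans that header points to. The sketch below illustrates that first step in Python. It assumes the PMTiles v3 header layout (127 bytes, little-endian, starting with the magic bytes `PMTiles`); the text above does not name a format version, and `parse_header`, `range_header`, and `Header` are hypothetical helpers, not the API of any official PMTiles library.

```python
import struct
from dataclasses import dataclass

# Assumption: PMTiles v3 header, a fixed 127-byte little-endian block.
HEADER_LEN = 127


@dataclass
class Header:
    version: int
    root_offset: int
    root_length: int
    metadata_offset: int
    metadata_length: int
    leaf_offset: int
    leaf_length: int
    tile_data_offset: int
    tile_data_length: int
    min_zoom: int
    max_zoom: int


def range_header(offset: int, length: int) -> dict:
    """Build an HTTP Range header for `length` bytes starting at `offset`.

    HTTP byte ranges are inclusive, so the last byte is offset + length - 1.
    """
    return {"Range": f"bytes={offset}-{offset + length - 1}"}


def parse_header(buf: bytes) -> Header:
    """Decode the fields of an (assumed) v3 header needed to locate the root directory."""
    if len(buf) < HEADER_LEN:
        raise ValueError("buffer is shorter than the PMTiles header")
    if buf[0:7] != b"PMTiles":
        raise ValueError("missing PMTiles magic bytes")
    version = buf[7]
    # Eight uint64 values at bytes 8..71: offset/length pairs for the root
    # directory, metadata, leaf directories, and tile data.
    (root_off, root_len, meta_off, meta_len,
     leaf_off, leaf_len, data_off, data_len) = struct.unpack_from("<8Q", buf, 8)
    # Single-byte zoom bounds at fixed positions in the v3 layout.
    min_zoom, max_zoom = buf[100], buf[101]
    return Header(version, root_off, root_len, meta_off, meta_len,
                  leaf_off, leaf_len, data_off, data_len, min_zoom, max_zoom)
```

In use, a client would first send a request with `range_header(0, HEADER_LEN)`, pass the response body to `parse_header`, and then request `range_header(h.root_offset, h.root_length)` to fetch the root directory. Decoding the directory itself (varint-encoded and possibly compressed) is left out of this sketch.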
