Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers

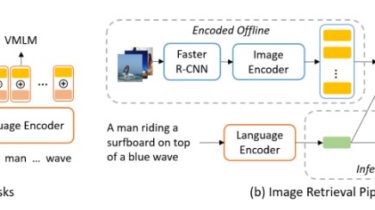

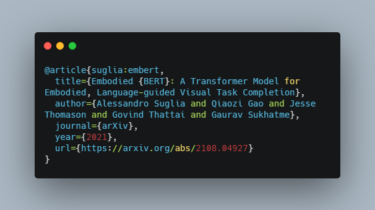

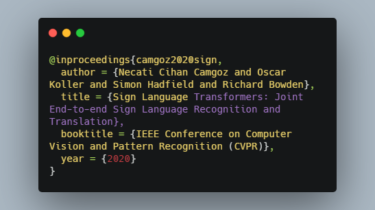

image-to-recipe-transformers

Code for the CVPR 2021 paper: Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning

This is the PyTorch companion code for the paper:

Amaia Salvador, Erhan Gundogdu, Loris Bazzani, and Michael Donoser. Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning. CVPR 2021.

If you find this code useful in your research, please consider citing using the following BibTeX entry:

@inproceedings{salvador2021revamping,
title={Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning},
author={Salvador, Amaia and Gundogdu, Erhan and […]
