
# tldr-transformers

The tl;dr on a few notable transformer/language model papers, plus other papers (alignment, memorization, etc.).

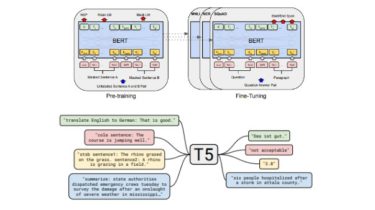

Models: GPT-\*, \*BERT\*, Adapter-\*, \*T5, etc.

Each set of notes includes links to the paper, the original code implementation (if available), and the Hugging Face :hugs: implementation.

Here is an example: t5.

The transformer papers are presented in roughly chronological order below. See the “:point_right: Notes :point_left:” column for the notes on each paper.

This repo also includes a single table quantifying the differences across the transformer papers.

## Quick_Note

This is not an intro to deep learning in NLP. If you are looking for that, I recommend one of the following: Fast AI’s course, one of the