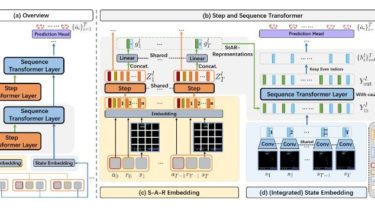

# AniFormer: Data-driven 3D Animation with Transformer

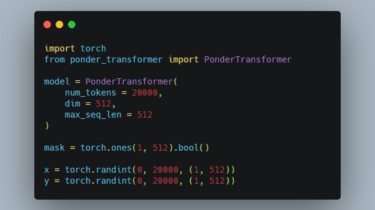

This is the PyTorch implementation of our BMVC 2021 paper *AniFormer: Data-driven 3D Animation with Transformer* by Haoyu Chen, Hao Tang, Nicu Sebe, and Guoying Zhao.

## Citation

If you use our code or paper, please consider citing:

```bibtex
@inproceedings{chen2021AniFormer,
  title={AniFormer: Data-driven 3D Animation with Transformer},
  author={Chen, Haoyu and Tang, Hao and Sebe, Nicu and Zhao, Guoying},
  booktitle={BMVC},
  year={2021}
}
```

## Dependencies

Requirements:

- python3.6
- numpy
- pytorch >= 1.1.0
- trimesh

## Dataset preparation

Please download the DFAUST dataset
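Before training, it can help to confirm that the listed requirements are actually installed. The script below is a minimal sketch, not part of the AniFormer repository: the helpers `version_tuple` and `check_requirements` are hypothetical names, and it assumes PyTorch is importable as `torch` and that each package exposes a `__version__` attribute. Only the PyTorch minimum (1.1.0) and Python 3.6 come from the requirement list; numpy and trimesh are only checked for presence.

```python
import importlib
import re
import sys

# Hypothetical requirement table: only the torch minimum comes from the README.
REQUIREMENTS = {
    "numpy": None,        # no minimum version stated
    "torch": (1, 1, 0),   # "pytorch >= 1.1.0"
    "trimesh": None,      # no minimum version stated
}


def version_tuple(version):
    """Convert a version string such as '1.10.0+cu113' into (1, 10, 0)."""
    parts = []
    # Drop any local build suffix ("+cu113"), then keep leading digits of each piece.
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_requirements(requirements=REQUIREMENTS):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if sys.version_info < (3, 6):
        problems.append("Python 3.6 or newer is required")
    for module_name, minimum in requirements.items():
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            problems.append(f"{module_name} is not installed")
            continue
        installed = getattr(module, "__version__", "0")
        if minimum is not None and version_tuple(installed) < minimum:
            wanted = ".".join(map(str, minimum))
            problems.append(f"{module_name} {installed} is older than {wanted}")
    return problems


if __name__ == "__main__":
    issues = check_requirements()
    for issue in issues:
        print("MISSING/OUTDATED:", issue)
    print("Environment OK" if not issues else f"{len(issues)} problem(s) found")
```

Version strings are compared as integer tuples rather than as raw strings, so that, for example, `1.10.0` correctly counts as newer than `1.9.0`.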
