Text Summarization Using Transformers: GPU Docker Deployment

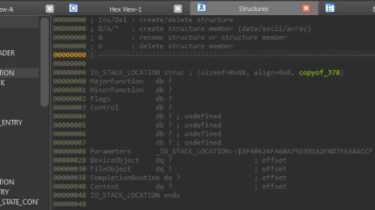

This post deploys a text summarization NLP use case in a Docker container that uses an NVIDIA GPU. To set up the environment on a Linux machine, follow the process below. Make sure your machine has a sufficiently powerful configuration. My system specs are listed below (I am using DataCrunch servers):

- GPU: 2xV100.10V
- Image: Ubuntu 20.04 + CUDA 11.1

Some Insights/Explorations

If you're a native Linux user, make sure to set up CUDA, cuDNN and the CUDA Toolkit. If you're a WSL2 user, then you […]
