Tmux session manager built on libtmux

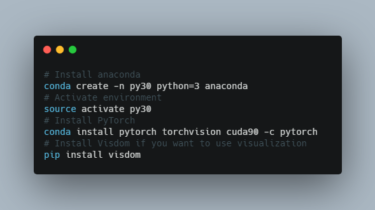

tmuxp is a tmux session manager built on libtmux.

We need help! tmuxp is a trusted session manager for tmux. If you could lend your time to helping answer issues and QA pull requests, please do! See issue #290!

New to tmux? The Tao of tmux is available on Leanpub and Amazon Kindle. Read and browse the book for free on the web.

Installation

$ pip install --user tmuxp

Load a tmux session

Load tmux sessions
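As a rough illustration of what a loadable session looks like, the sketch below builds a minimal session description and writes it out as JSON, one of the config formats tmuxp accepts. The session name, window name, layout, pane commands, and the helper functions `build_session_config` and `write_session_config` are all hypothetical and chosen only for this example; they are not taken from the tmuxp README.

```python
import json
from pathlib import Path


def build_session_config(name: str = "demo") -> dict:
    """Return a minimal tmuxp-style session description as a plain dict.

    All values here (names, layout, commands) are illustrative assumptions.
    """
    return {
        "session_name": name,
        "windows": [
            {
                "window_name": "editor",
                "layout": "main-vertical",
                "panes": [
                    # Each pane runs its shell_command list when the session loads.
                    {"shell_command": ["echo 'first pane'"]},
                    {"shell_command": ["echo 'second pane'"]},
                ],
            }
        ],
    }


def write_session_config(path: Path, name: str = "demo") -> Path:
    """Serialize the session description to a JSON file and return its path."""
    path.write_text(json.dumps(build_session_config(name), indent=2))
    return path
```

Assuming the file was written as `demo.json` in the current directory, it could then be loaded with `$ tmuxp load ./demo.json`.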
