How to Implement Bayesian Optimization from Scratch in Python

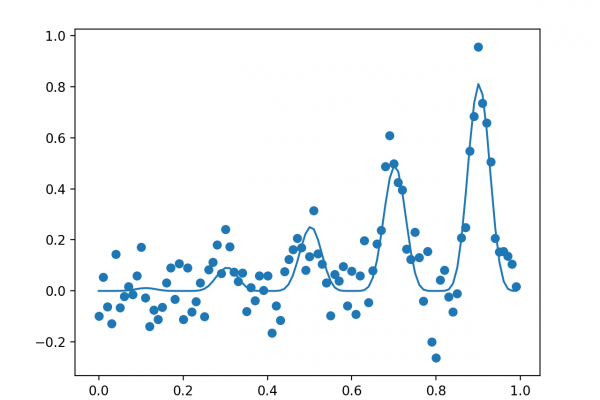

Last Updated on August 22, 2020

In this tutorial, you will discover how to implement the Bayesian Optimization algorithm for complex optimization problems. Global optimization is the challenging problem of finding an input that results in the minimum or maximum cost of a given objective function. Typically, the form of the objective function is complex and intractable to analyze, and it is often non-convex, nonlinear, high-dimensional, noisy, and computationally expensive to evaluate. Bayesian Optimization provides a principled technique based on […]
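To make the problem concrete, here is a minimal sketch of one common way to set up Bayesian Optimization; it is not necessarily the implementation the full tutorial develops. It assumes a Gaussian process surrogate with an RBF kernel and the expected improvement acquisition function, both written directly in NumPy. The test function, the function names (`objective`, `gp_posterior`, `expected_improvement`, `bayes_opt`), and all parameter values are hypothetical choices made for illustration.

```python
import math
import numpy as np

def objective(x, rng, noise=0.1):
    """Hypothetical noisy, multimodal objective on [0, 1] (to be maximized)."""
    x = np.asarray(x, dtype=float)
    return x**2 * np.sin(5 * np.pi * x)**6 + rng.normal(0.0, noise, size=x.shape)

def rbf_kernel(a, b, length_scale=0.1):
    """RBF (squared exponential) kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(X, y, Xs, noise_var=0.01):
    """Posterior mean and standard deviation of a zero-mean GP at points Xs."""
    K = rbf_kernel(X, X) + noise_var * np.eye(len(X))
    Ks = rbf_kernel(X, Xs)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    mu = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = 1.0 - np.sum(v**2, axis=0)  # prior variance k(x, x) is 1
    return mu, np.sqrt(np.maximum(var, 1e-12))

def expected_improvement(mu, sigma, best):
    """Expected improvement over the best observed value (maximization)."""
    z = (mu - best) / sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (mu - best) * cdf + sigma * pdf

def bayes_opt(n_init=5, n_iter=20, seed=0):
    """Run the surrogate/acquisition loop and return the best sample found."""
    rng = np.random.default_rng(seed)
    X = rng.random(n_init)            # random initial inputs in [0, 1)
    y = objective(X, rng)             # expensive evaluations, in a real problem
    grid = np.linspace(0.0, 1.0, 501) # candidate inputs scored by the surrogate
    for _ in range(n_iter):
        mu, sigma = gp_posterior(X, y, grid)
        ei = expected_improvement(mu, sigma, y.max())
        x_next = grid[[np.argmax(ei)]]  # most promising candidate, shape (1,)
        X = np.concatenate([X, x_next])
        y = np.concatenate([y, objective(x_next, rng)])
    i = np.argmax(y)
    return X[i], y[i], X, y

if __name__ == "__main__":
    x_best, y_best, _, _ = bayes_opt()
    print(f"best sampled input: {x_best:.3f}, noisy score: {y_best:.3f}")
```

The design point to notice is that the real objective is called only once per iteration. All of the searching happens against the cheap surrogate, which is what makes the approach attractive when each evaluation is expensive.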
