Use Early Stopping to Halt the Training of Neural Networks At the Right Time

Last Updated on August 25, 2020

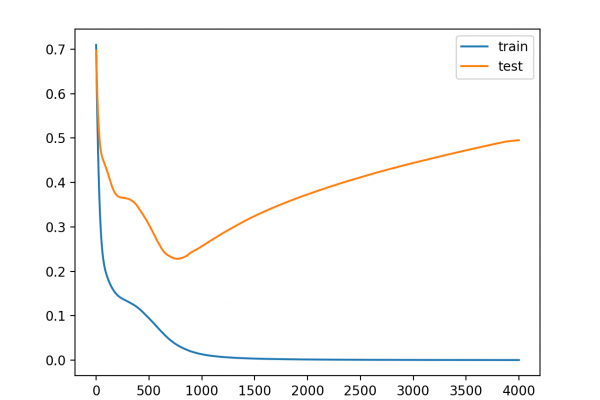

A problem with training neural networks is choosing the number of training epochs to use.

Too many epochs can lead to overfitting of the training dataset, whereas too few may result in an underfit model. Early stopping is a method that allows you to specify an arbitrarily large number of training epochs and stop training once the model's performance stops improving on a hold-out validation dataset.
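The stopping rule itself is simple enough to sketch in plain Python. The example below is a minimal illustration, not the Keras implementation. It assumes one validation loss per epoch (lower is better), a `patience` that sets how many epochs without improvement are tolerated, and a `min_delta` that sets the smallest drop that counts as an improvement. Keras exposes similar `monitor`, `patience`, and `min_delta` arguments on its `EarlyStopping` callback. The function name `early_stopping_epoch` and the loss values are hypothetical.

```python
import math
from typing import List, Tuple


def early_stopping_epoch(
    val_losses: List[float],
    patience: int = 3,
    min_delta: float = 0.0,
) -> Tuple[int, int]:
    """Simulate patience-based early stopping over per-epoch validation losses.

    Hypothetical helper for illustration. Returns (stop_epoch, best_epoch),
    both 0-indexed: the epoch after which training halts, and the epoch whose
    weights a "save best only" checkpoint would have kept.
    """
    best_loss = math.inf
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: record this epoch (a checkpoint would save weights here).
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # No improvement for `patience` consecutive epochs: halt training.
                return epoch, best_epoch
    # The epoch budget ran out before the stopping rule triggered.
    return len(val_losses) - 1, best_epoch


if __name__ == "__main__":
    # Hypothetical validation-loss curve: improves, then plateaus and rises.
    losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.51, 0.56, 0.60]
    print(early_stopping_epoch(losses, patience=3))  # prints (6, 3)
```

Training halts at epoch 6, three epochs after the best loss at epoch 3. This is why early stopping is usually paired with a checkpoint: the final weights are not the best weights.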

In this tutorial, you will discover the Keras API for adding early stopping to overfit deep learning neural network models.

After completing this tutorial, you will know:

- How to monitor the performance of a model during training using the Keras API.

- How to create and configure early stopping and model checkpoint callbacks using the Keras API.

- How to reduce overfitting by adding early stopping to an existing model.


Let’s get started.

- Updated Oct/2019: Updated for Keras 2.3 and TensorFlow 2.0.

To finish reading, please visit source site