Self-Supervised Learning with Vision Transformers

By Zhenda Xie*, Yutong Lin*, Zhuliang Yao, Zheng Zhang, Qi Dai, Yue Cao and Han Hu

This repo is the official implementation of “Self-Supervised Learning with Swin Transformers”.

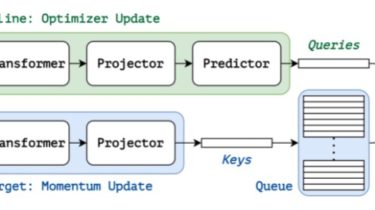

An important feature of this codebase is that it includes Swin Transformer as one of the backbones, so that the transfer performance of the learnt representations can be evaluated on the downstream tasks of object detection and semantic segmentation. Previous works usually omit this evaluation because they use ViT/DeiT, which have not been well tamed for downstream tasks.

It currently includes code and models for the following tasks:

Self-Supervised Learning and Linear Evaluation: Included in this repo. See get_started.md for a quick start.

Transferring Performance on