PyTorch implementation of Google AI’s 2018 BERT, with simple annotations

BERT-pytorch


Paper: BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (2018). Paper URL: https://arxiv.org/abs/1810.04805

Google AI’s BERT paper reports strong results across a range of NLP tasks (new state-of-the-art results on 11 NLP tasks), including outperforming the human F1 score on the SQuAD v1.1 question answering task.

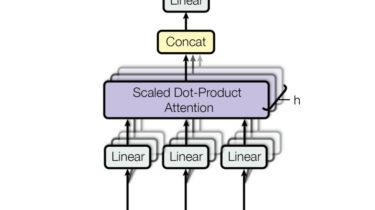

The paper showed that a Transformer (self-attention) based encoder, trained with a suitable language modeling objective, can serve as a powerful alternative to previous language models.

More importantly, the authors showed that this pre-trained language model can be transferred to a wide range of NLP tasks without designing a task-specific model architecture.

This result is likely to be remembered as a milestone in NLP history, and I expect many further papers about BERT to be published very soon.

This repo is an implementation of BERT. The code is very simple and