Estimate the Number of Experiment Repeats for Stochastic Machine Learning Algorithms

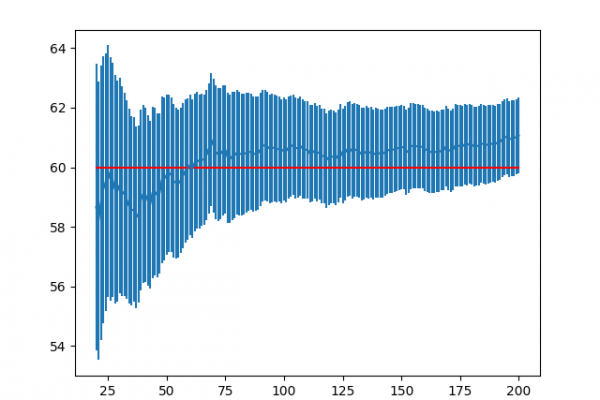

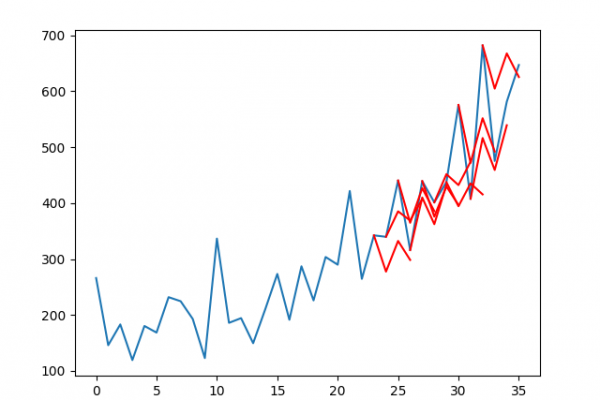

Last Updated on August 14, 2020

A problem with many stochastic machine learning algorithms is that different runs of the same algorithm on the same data return different results. This means that when you run experiments to configure a stochastic algorithm or to compare algorithms, you must collect multiple results and use the average performance to summarize the skill of the model. This raises the question of how many repeats of an experiment are enough to characterize the skill of […]
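As a rough illustration of collecting multiple results and averaging them, the sketch below simulates repeated runs and reports the mean score together with its standard error for several repeat counts. The `run_experiment` function is a hypothetical stand-in (a Gaussian draw with an assumed mean of 0.80 and standard deviation of 0.05), not a real model evaluation; in practice it would train and score the model once with a fresh random seed.

```python
import random
import statistics


def run_experiment(rng):
    # Hypothetical stand-in for one train-and-evaluate run of a stochastic
    # algorithm. The mean (0.80) and spread (0.05) are assumed values.
    return rng.gauss(0.80, 0.05)


def summarize(scores):
    # Mean score and standard error of the mean for a list of results.
    mean = statistics.mean(scores)
    sem = statistics.stdev(scores) / len(scores) ** 0.5
    return mean, sem


rng = random.Random(1)  # fixed seed so the simulation is reproducible
scores = [run_experiment(rng) for _ in range(100)]

# Compare the summary as more repeats are included.
for n in (5, 10, 30, 100):
    mean, sem = summarize(scores[:n])
    print(f"repeats={n:3d} mean={mean:.3f} standard_error={sem:.3f}")
```

The standard error generally shrinks as repeats are added, so one way to judge whether there are enough repeats is to look for the point where further runs no longer change the estimate by an amount that matters for the comparison at hand.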
