A Naturally-Occurring Dataset Based on Stack Exchange Data

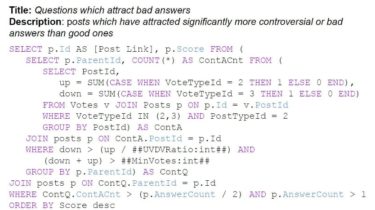

SEDE (Stack Exchange Data Explorer) is a new dataset for Text-to-SQL tasks with more than 12,000 SQL queries and their natural language descriptions. It is based on real usage by users of the Stack Exchange Data Explorer platform, which brings complexities and challenges not seen in any other semantic parsing dataset, including complex nesting, date manipulation, numeric and text manipulation, parameters, and most importantly, under-specification and hidden assumptions. Paper (NLP4Prog workshop at ACL2021): Text-to-SQL in the Wild: A Naturally-Occurring […]
