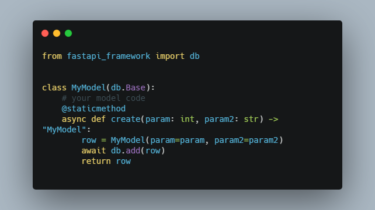

Extract the information contained in the QR code from the proof of vaccination generated by the Government of Quebec.

Run the Python 3 script `extraire.py`, passing the path to the PDF file generated by the Government of Quebec as a parameter.

GitHub: https://github.com/glabmoris/DecodeurPreuveVaccinationQC
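As an illustration of what decoding such a QR code can involve, here is a minimal sketch. It assumes the QR text follows the SMART Health Cards convention (an `shc:/` prefix followed by pairs of digits), which this description does not state. The function name `decode_shc` is hypothetical and is not taken from `extraire.py`. The sketch does not read the QR image out of the PDF and does not verify the signature.

```python
import base64
import json
import zlib


def decode_shc(qr_text: str) -> dict:
    """Decode a single-chunk 'shc:/' numeric payload into its JSON body.

    Assumption: the QR text uses the SMART Health Cards numeric encoding.
    The signature is NOT verified here.
    """
    prefix = "shc:/"
    if not qr_text.startswith(prefix):
        raise ValueError("not an shc:/ payload")
    digits = qr_text[len(prefix):]
    if len(digits) % 2 != 0 or not digits.isdigit():
        raise ValueError("expected an even-length string of digits")

    # Each pair of digits encodes one character: value + 45 is its ASCII code.
    jws = "".join(
        chr(int(digits[i:i + 2]) + 45) for i in range(0, len(digits), 2)
    )

    # A compact JWS has three dot-separated parts: header, payload, signature.
    _header_b64, payload_b64, _signature_b64 = jws.split(".")

    # The payload is base64url without padding, so restore the padding first.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    compressed = base64.urlsafe_b64decode(padded)

    # The payload is raw DEFLATE (no zlib header), hence wbits=-15.
    return json.loads(zlib.decompress(compressed, wbits=-15))
```

Calling `decode_shc(text)` on the string read from the QR code would return the payload as a Python dictionary. Multi-chunk codes (for example `shc:/1/2/...`) are rejected with a `ValueError` in this sketch.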
