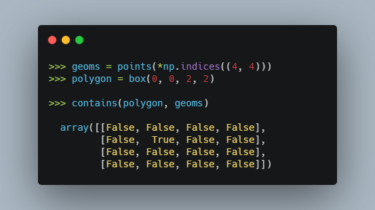

A C/Python library with vectorized geometry functions

PyGEOS is a C/Python library with vectorized geometry functions. The geometry operations are performed by the open-source geometry library GEOS. PyGEOS wraps these operations in NumPy ufuncs, which improves performance when operating on arrays of geometries.

Note: PyGEOS is a very young package. While the available functionality should be stable and work correctly, APIs may still change in upcoming releases. But we would love for you to try it out, give feedback, or contribute! What is […]
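To make the "NumPy ufunc" idea concrete, here is a minimal sketch of a scalar geometry predicate lifted to work element-wise over an array of geometries. It does not use PyGEOS or GEOS: the `Point` and `Box` classes and the `box_covers` function are hypothetical stand-ins written in plain Python, and `np.frompyfunc` only mimics the calling convention, not the speed of a ufunc implemented in C.

```python
from dataclasses import dataclass

import numpy as np


# Hypothetical stand-ins for geometry objects (not PyGEOS types).
@dataclass(frozen=True)
class Point:
    x: float
    y: float


@dataclass(frozen=True)
class Box:
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def box_covers(box: Box, point: Point) -> bool:
    """Scalar predicate: True if the point lies in the box or on its edge."""
    return box.xmin <= point.x <= box.xmax and box.ymin <= point.y <= box.ymax


# Lift the scalar predicate to a ufunc with 2 inputs and 1 output.
covers = np.frompyfunc(box_covers, 2, 1)

# An object array holds one geometry per element.
points = np.array(
    [Point(0.5, 0.5), Point(2.0, 2.0), Point(1.0, 0.0)], dtype=object
)
unit_box = Box(0.0, 0.0, 1.0, 1.0)

# The single box is broadcast against all three points in one call.
inside = covers(unit_box, points).astype(bool)
print(inside)
```

The call site contains no explicit loop: one call handles the whole array, and the scalar box is broadcast against every point. A library that implements the ufunc in C keeps this calling style while moving the per-element work out of the Python interpreter.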
