Machine Translation Weekly 79: More context in MT

The lack of broader context is one of the main problems in machine translation
and in NLP in general. People have tried various methods, with quite mixed
results. A recent preprint from Unbabel introduces an unusual quantification of
context-awareness and, based on that, proposes some training improvements. The
paper is titled *Measuring and Increasing Context Usage in Context-Aware Machine
Translation* and will be presented at ACL 2021.

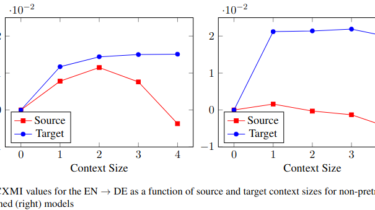

The paper measures how well informed the model is about the context by
computing a quantity that the authors call conditional cross-mutual information. It
sounds complicated, but it is “just” the difference between the entropy that the model
assigns to the reference sentence without the context and with it. In other words,
how much more probable the correct translation becomes if more context is