Machine Translation Weekly 74: Architectures we will hear about in MT

This week, I would like to feature three recent papers with innovations in neural architectures that I think might become important in MT and multilingual NLP during the next year. But of course, I might be wrong: in MT Weekly 27, I self-assuredly claimed that the Reformer architecture would start an era of much larger models than we have now and would turn the attention of the community towards document-level problems, and that does not seem to be happening.

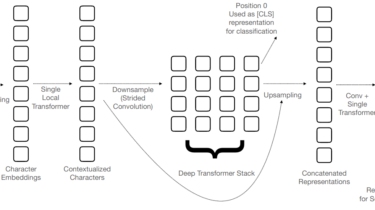

CANINE: Tokenization-free encoder

Tokenization is one of the major hassles in NLP. Splitting text into words (or other meaningful units) sounds simple, but it gets quite complicated when you go into the details. These days, the German parliament is discussing the “Infektionsschutzgesetz”, which means the infection protection law. It is formally