Machine Translation Weekly 71: Explaining Random Feature Attention

Transformers are the neural architecture that underlies most of the current

state-of-the-art machine translation and natural language processing in

general. One of their major drawbacks is the quadratic complexity of the

underlying self-attention mechanism, which in practice limits the sequence

length that Transformers can process. Several tricks already exist

to deal with that. One of them is locality-sensitive hashing, which was used in the

Reformer architecture (see MT Weekly

27). The main idea was to compute the

self-attention only for hidden states that fall into the same hash function

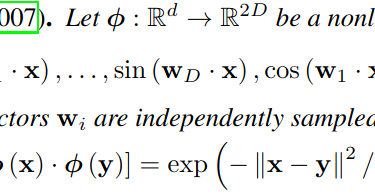

bucket. Random feature attention, a paper

by DeepMind and the University of Washington that will be presented at this

year’s ICLR, introduces a new way of

approximating the attention computation without materializing the quadratic