LSTM Model Architecture for Rare Event Time Series Forecasting

Last Updated on August 5, 2019

Applying LSTMs directly to time series forecasting has shown little success.

This is surprising because neural networks are known to learn complex non-linear relationships, and the LSTM is perhaps the most successful type of recurrent neural network, one that directly supports multivariate sequence prediction problems.

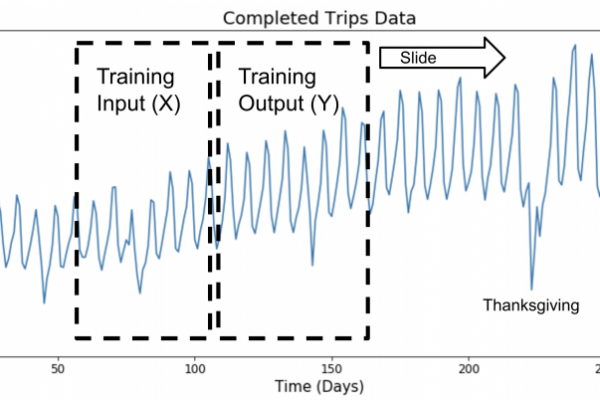

A recent study performed at Uber AI Labs demonstrates how both the automatic feature learning capabilities of LSTMs and their ability to handle input sequences can be harnessed in an end-to-end model for forecasting driver demand during rare events like public holidays.

In this post, you will discover an approach to developing a scalable end-to-end LSTM model for time series forecasting.

After reading this post, you will know:

- The challenge of multivariate, multi-step forecasting across multiple sites, in this case cities.

- An LSTM model architecture for time series forecasting composed of separate autoencoder and forecasting sub-models.

- The skill of the proposed LSTM architecture at rare event demand forecasting and the ability to reuse the trained model on unrelated forecasting problems.
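To make the "multivariate, multi-step" framing concrete, here is a minimal sketch of how one site's series might be split into supervised samples before being fed to a sequence model. It is an illustration only, not the study's preprocessing: the function name `make_windows`, the window sizes, and the toy data are all assumptions made for this example.

```python
import numpy as np

def make_windows(series, n_in, n_out, target_col=0):
    """Split a (timesteps, features) array into supervised samples.

    Hypothetical helper for illustration only.
    Returns X with shape (samples, n_in, features) and
    y with shape (samples, n_out), taken from column `target_col`.
    """
    series = np.asarray(series, dtype=float)
    X, y = [], []
    # Last index at which a full input window plus output window still fits.
    last_start = len(series) - n_in - n_out
    for start in range(last_start + 1):
        end_in = start + n_in
        X.append(series[start:end_in, :])                    # multivariate input window
        y.append(series[end_in:end_in + n_out, target_col])  # multi-step target
    return np.array(X), np.array(y)

# Toy data (assumed): 10 time steps, 2 features, e.g. demand and one external signal.
data = np.column_stack([np.arange(10), np.arange(10) * 10])
X, y = make_windows(data, n_in=4, n_out=2)
print(X.shape, y.shape)
```

With several sites, one option is to build such windows separately for each city and stack the results, so that a single model is trained across all of them.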

