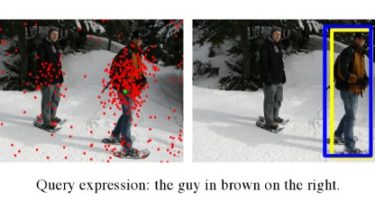

Look before you leap: learning landmark features for one-stage visual grounding

LBYL-Net

This repo implements the paper "Look Before You Leap: Learning Landmark Features for One-Stage Visual Grounding" (CVPR 2021).

Getting Started

Prerequisites

- python 3.7

- pytorch 10.0

- cuda 10.0

- gcc 4.9.2 or above
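Before installing, it can help to see which of these tools are already present. The script below is a minimal sketch, not part of this repo: the helper name `check_environment` is hypothetical, and it only reports the versions it finds without enforcing any of them.

```python
import shutil
import subprocess
import sys


def check_environment():
    """Report the versions of the tools named in the prerequisites list.

    Hypothetical helper for illustration; it is not shipped with the repo.
    """
    report = {"python": "{}.{}.{}".format(*sys.version_info[:3])}

    # PyTorch may not be installed yet, so treat a failed import as "not found".
    try:
        import torch
    except ImportError:
        report["pytorch"] = None
        report["cuda"] = None
    else:
        report["pytorch"] = torch.__version__
        # torch.version.cuda is None for CPU-only builds of PyTorch.
        report["cuda"] = torch.version.cuda

    # gcc is needed to compile the extension; -dumpversion prints its version.
    gcc = shutil.which("gcc")
    if gcc is None:
        report["gcc"] = None
    else:
        result = subprocess.run([gcc, "-dumpversion"], capture_output=True, text=True)
        report["gcc"] = result.stdout.strip() or None
    return report


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value if value is not None else 'not found'}")
```

Compare the printed versions against the list above by hand. The CUDA entry reflects the toolkit PyTorch was built against, which may differ from the system-wide CUDA installation.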

Installation

- Clone the repo and install dependencies.

      git clone https://github.com/svip-lab/LBYLNet.git
      cd LBYLNet
      pip install -r requirements.txt

- You also need to install our landmark feature convolution:

      cd ext
      git clone https://github.com/hbb1/landmarkconv.git
      cd landmarkconv/lib/layers
      python setup.py install --user

- We follow the dataset structure of DMS and FAOA. For convenience, we have packed the datasets together, including ReferItGame, RefCOCO, RefCOCO+, and RefCOCOg.

      bash data/refer/download_data.sh ./data/refer

- Download the generated index files and place them in ./data/refer. They are available at [Gdrive] and [One Drive].
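After the download script has run and the index files are in place, a quick listing of `./data/refer` can confirm that the directory is populated. This is a minimal sketch under stated assumptions: the helper name `summarize_data_dir` is hypothetical, and the script deliberately does not check for specific file names, since the expected layout is defined by the download script and the index archives rather than by this example.

```python
from pathlib import Path


def summarize_data_dir(root="./data/refer"):
    """Return the sorted top-level entry names under root, or None if root is missing.

    Hypothetical helper for illustration; directories are marked with a trailing slash.
    """
    path = Path(root)
    if not path.is_dir():
        return None
    return sorted(
        entry.name + ("/" if entry.is_dir() else "") for entry in path.iterdir()
    )


if __name__ == "__main__":
    entries = summarize_data_dir()
    if entries is None:
        print("./data/refer not found; run the download script first")
    elif not entries:
        print("./data/refer is empty")
    else:
        print("\n".join(entries))
```

Run it from the repository root so that the relative path resolves to the same directory the download script wrote to.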