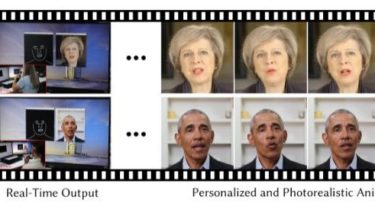

Live Speech Portraits: Real-Time Photorealistic Talking-Head Animation

This repository contains the implementation of the following paper:

Live Speech Portraits: Real-Time Photorealistic Talking-Head Animation

Yuanxun Lu, Jinxiang Chai, Xun Cao (SIGGRAPH Asia 2021)

Abstract: To the best of our knowledge, we first present a live system that generates personalized photorealistic talking-head animation only driven by audio signals at over 30 fps. Our system contains three stages. The first stage is a deep neural network that extracts deep audio features along with a manifold projection to project the features to the target person’s speech space. In the second stage, we learn facial dynamics and motions from the projected audio features. The predicted motions include head poses and upper body motions, where the former is generated by an autoregressive probabilistic model which models