How to Fix the Vanishing Gradients Problem Using the ReLU

Last Updated on August 25, 2020

The vanishing gradients problem is one example of unstable behavior that you may encounter when training a deep neural network.

It describes the situation where a deep multilayer feed-forward network or a recurrent neural network is unable to propagate useful gradient information from the output end of the model back to the layers near the input end of the model.
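To make this concrete, here is a rough sketch of the backpropagation recursion for a plain feed-forward network. The notation is an assumption introduced for illustration and is not taken from the tutorial: $z_l = W_l h_{l-1} + b_l$ is the pre-activation of layer $l$, $h_l = \phi(z_l)$ is its output, and $\delta_l = \partial \mathcal{L} / \partial z_l$ is the error signal reaching that layer.

$$
\delta_l = \operatorname{diag}\big(\phi'(z_l)\big)\, W_{l+1}^{\top}\, \delta_{l+1}
\qquad\Longrightarrow\qquad
\lVert \delta_l \rVert \;\le\; \max_i \big|\phi'(z_{l,i})\big| \,\cdot\, \lVert W_{l+1} \rVert \,\cdot\, \lVert \delta_{l+1} \rVert
$$

Each step back toward the input multiplies the error signal by one more activation derivative and one more weight matrix. When those factors are typically smaller than 1, as they are for a saturated tanh unit where $\phi'(z) = 1 - \tanh^2(z)$ is close to 0, the signal shrinks roughly geometrically with depth.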

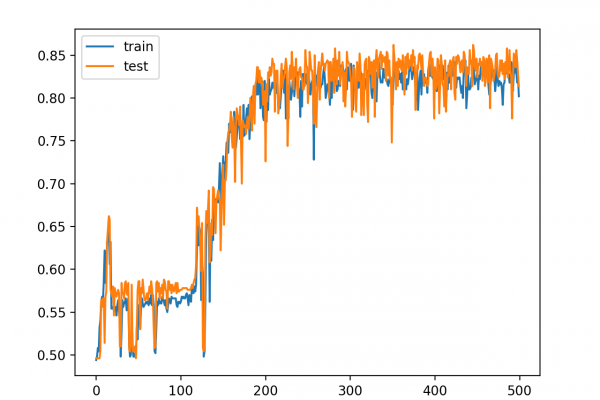

The result is that models with many layers are generally unable to learn on a given dataset, or they converge prematurely to a poor solution.

Many fixes and workarounds have been proposed and investigated, such as alternative weight initialization schemes, unsupervised pre-training, layer-wise training, and variations on gradient descent. Perhaps the most common change is the use of the rectified linear activation function (ReLU), which has become the new default in place of the hyperbolic tangent activation function that was the default through the late 1990s and 2000s.
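As a small illustration of why the choice of activation function matters, the sketch below compares how the derivative of tanh and the derivative of the rectified linear function compound across layers. It is a deliberately simplified model, not the tutorial's code: it assumes every layer sees the same pre-activation value and ignores the weight matrices entirely. The helper names (`tanh_grad`, `relu_grad`, `gradient_scale`) and the chosen values (pre-activation 1.5, 10 layers) are hypothetical.

```python
import numpy as np


def tanh_grad(z):
    """Derivative of tanh: 1 - tanh(z)^2, at most 1 and near 0 when saturated."""
    return 1.0 - np.tanh(z) ** 2


def relu_grad(z):
    """Derivative of the rectified linear function: 1 for z > 0, else 0."""
    return np.where(z > 0, 1.0, 0.0)


def gradient_scale(activation_grad, z, n_layers):
    """Factor applied to a gradient after passing back through n_layers
    identical activations (assumption: same pre-activation z at every
    layer, weights ignored)."""
    z = np.asarray(z, dtype=float)
    return float(activation_grad(z) ** n_layers)


# Assumed illustrative values: a moderately large pre-activation, 10 layers.
tanh_scale = gradient_scale(tanh_grad, 1.5, 10)
relu_scale = gradient_scale(relu_grad, 1.5, 10)

print(f"tanh: gradient scaled by {tanh_scale:.2e} after 10 layers")
print(f"relu: gradient scaled by {relu_scale:.2e} after 10 layers")
```

Under these assumptions the tanh factor falls many orders of magnitude below 1, while the rectified linear factor stays at exactly 1 for any positive pre-activation. In a real network the weights and the spread of pre-activations also matter, so this should be read as intuition rather than a measurement.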

In this tutorial, you will discover how to diagnose a vanishing gradient problem when training a neural network model and how to fix it using an alternative activation function and weight initialization scheme.

After completing this tutorial, you will
