How to Develop an Ensemble of Deep Learning Models in Keras

Last Updated on August 28, 2020

Deep learning neural network models are highly flexible nonlinear algorithms capable of learning a near-infinite number of mapping functions.

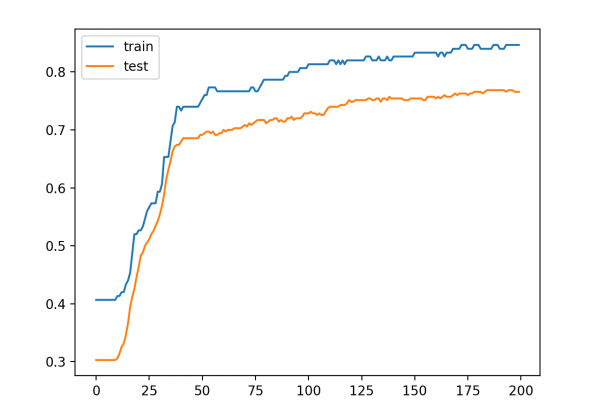

A frustration with this flexibility is the high variance in a final model. The same neural network model trained on the same dataset may find one of many different possible “good enough” solutions each time it is run.

Model averaging is an ensemble learning technique that reduces the variance in a final neural network model. It gives up some of the spread in the model's performance in exchange for more confidence in what performance to expect.
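The variance reduction can be illustrated with a small simulation. This is only a sketch under stated assumptions: each "model" is a hypothetical stand-in that returns the true value plus independent Gaussian noise, and the names `noisy_prediction` and `ensemble_prediction` are invented for illustration. Real ensemble members trained on the same data tend to make correlated errors, so the reduction in practice is usually smaller than what this idealized setup shows.

```python
import random
import statistics

def noisy_prediction(true_value, noise_std, rng):
    """Hypothetical stand-in for one trained model: truth plus run-to-run noise."""
    return true_value + rng.gauss(0.0, noise_std)

def ensemble_prediction(true_value, noise_std, n_members, rng):
    """Average the predictions of n_members independent stand-in models."""
    preds = [noisy_prediction(true_value, noise_std, rng) for _ in range(n_members)]
    return statistics.mean(preds)

rng = random.Random(1)  # fixed seed so the run is repeatable
true_value, noise_std, n_repeats = 10.0, 1.0, 1000

# Repeat the experiment many times to measure the spread of each approach.
single = [noisy_prediction(true_value, noise_std, rng) for _ in range(n_repeats)]
averaged = [ensemble_prediction(true_value, noise_std, 10, rng) for _ in range(n_repeats)]

single_spread = statistics.stdev(single)
ensemble_spread = statistics.stdev(averaged)
print(f"single model spread:       {single_spread:.3f}")
print(f"10-member ensemble spread: {ensemble_spread:.3f}")
```

Under the independence assumption, the spread of the averaged prediction shrinks roughly with the square root of the number of members, which is why the ensemble line should print a noticeably smaller value than the single-model line.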

In this tutorial, you will discover how to develop a model averaging ensemble in Keras to reduce the variance in a final model.

After completing this tutorial, you will know:

- Model averaging is an ensemble learning technique that can be used to reduce the expected variance of deep learning neural network models.

- How to implement model averaging in Keras for classification and regression predictive modeling problems.

- How to work through a multi-class classification problem and use model averaging to reduce the variance of the final model.
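As a rough preview of the combination step, the sketch below averages the outputs of several ensemble members using plain Python lists. It assumes the predictions have already been collected; in Keras they would typically come from calling `model.predict(X)` on each trained member, which returns arrays rather than lists. The function names `average_predictions` and `predict_classes` and the example numbers are hypothetical, chosen only for illustration.

```python
def average_predictions(member_predictions):
    """Average per-sample output vectors across ensemble members.

    member_predictions[m][i] is the output vector of member m for sample i.
    """
    n_members = len(member_predictions)
    averaged = []
    for per_sample in zip(*member_predictions):  # outputs of all members for one sample
        averaged.append([sum(values) / n_members for values in zip(*per_sample)])
    return averaged

def predict_classes(member_predictions):
    """Classification: pick the class with the highest averaged probability."""
    rows = average_predictions(member_predictions)
    return [max(range(len(row)), key=row.__getitem__) for row in rows]

# Classification: three members, two samples, three classes (made-up probabilities).
class_probs = [
    [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]],  # member A
    [[0.3, 0.5, 0.2], [0.1, 0.3, 0.6]],  # member B
    [[0.5, 0.2, 0.3], [0.3, 0.3, 0.4]],  # member C
]
labels = predict_classes(class_probs)
print(labels)

# Regression: two members, two samples, one output each (made-up values).
reg_outputs = [
    [[2.0], [4.0]],  # member A
    [[4.0], [6.0]],  # member B
]
reg_mean = average_predictions(reg_outputs)
print(reg_mean)
```

For classification the members' predicted probabilities are averaged before taking the arg max, so a single member that disagrees (member B on the first sample, member A on the second) is outvoted. For regression the averaged values are used directly as the ensemble prediction.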
