How to Develop a Pix2Pix GAN for Image-to-Image Translation

Last Updated on September 1, 2020

The Pix2Pix Generative Adversarial Network, or GAN, is an approach to training a deep convolutional neural network for image-to-image translation tasks.

The careful configuration of the architecture as a type of image-conditional GAN allows for both the generation of large images compared to prior GAN models (e.g. 256×256 pixels) and good performance on a variety of image-to-image translation tasks.

In this tutorial, you will discover how to develop a Pix2Pix generative adversarial network for image-to-image translation.

After completing this tutorial, you will know:

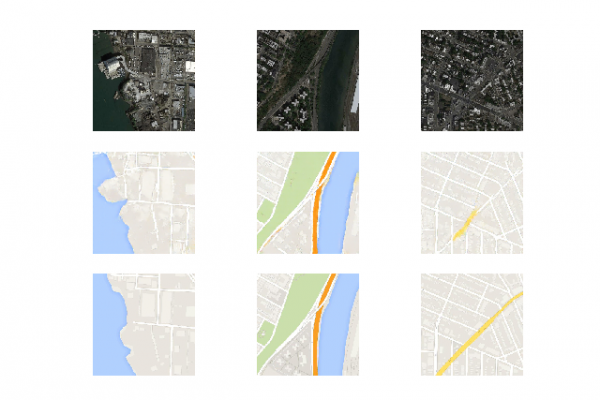

- How to load and prepare the dataset of satellite images and Google Maps images for image-to-image translation.

- How to develop a Pix2Pix model for translating satellite photographs to Google Maps images.

- How to use the final Pix2Pix generator model to translate ad hoc satellite images.

Kick-start your project with my new book Generative Adversarial Networks with Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.