How to Develop a Horizontal Voting Deep Learning Ensemble to Reduce Variance

Last Updated on August 25, 2020

Predictive modeling problems where the training dataset is small relative to the number of unlabeled examples are challenging.

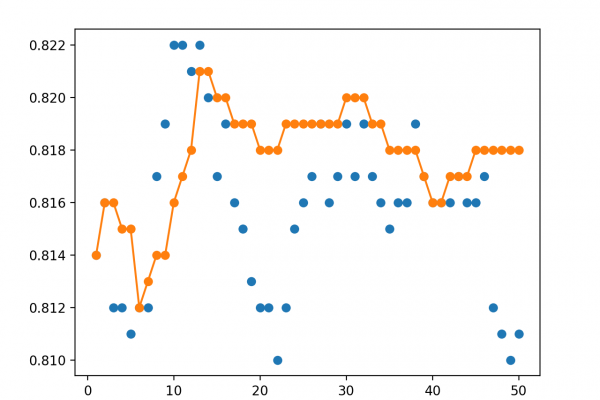

Neural networks can perform well on these types of problems, although they can suffer from high variance in model performance as measured on a training or hold-out validation dataset. This makes choosing which model to use as the final model risky, as there is no clear signal as to which model is better than another toward the end of the training run.

The horizontal voting ensemble is a simple method to address this issue. A collection of models saved over contiguous training epochs towards the end of a training run is used as an ensemble. On average, this gives more stable and better performance than randomly choosing a single final model.
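The idea can be illustrated with a minimal sketch in Python (standard library only). It assumes a tiny logistic-regression model trained with stochastic gradient descent as a stand-in for a neural network. All function names (`train_with_snapshots`, `horizontal_vote`, and so on) are hypothetical and used only for illustration. The sketch keeps a copy of the weights after each of the last `n_snapshots` contiguous epochs. It then combines them by averaging their predicted probabilities (soft voting); a hard majority vote over class labels is an alternative.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(weights, x):
    # weights = [bias, w1, w2, ...]; returns P(y = 1 | x).
    z = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    return sigmoid(z)


def sgd_epoch(weights, data, lr, rng):
    # One shuffled pass of stochastic gradient descent on log loss.
    w = list(weights)
    order = list(range(len(data)))
    rng.shuffle(order)
    for i in order:
        x, y = data[i]
        err = predict_proba(w, x) - y
        w[0] -= lr * err
        for j, xi in enumerate(x):
            w[j + 1] -= lr * err * xi
    return w


def train_with_snapshots(data, n_features, n_epochs, n_snapshots, lr=0.1, seed=0):
    # Train for n_epochs, saving a copy of the weights after each of the
    # last n_snapshots contiguous epochs (the "horizontal" members).
    rng = random.Random(seed)
    weights = [0.0] * (n_features + 1)
    snapshots = []
    for epoch in range(n_epochs):
        weights = sgd_epoch(weights, data, lr, rng)
        if epoch >= n_epochs - n_snapshots:
            snapshots.append(list(weights))
    return snapshots


def horizontal_vote(snapshots, x):
    # Average the predicted probability over all saved snapshots, then threshold.
    avg = sum(predict_proba(w, x) for w in snapshots) / len(snapshots)
    return 1 if avg >= 0.5 else 0


def make_blobs(n, seed):
    # Toy two-class data: Gaussian blobs centred at (-1, -1) and (1, 1).
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        center = 1.0 if y == 1 else -1.0
        data.append(([rng.gauss(center, 1.0), rng.gauss(center, 1.0)], y))
    return data


def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)


if __name__ == "__main__":
    train = make_blobs(100, seed=1)
    test = make_blobs(1000, seed=2)
    snapshots = train_with_snapshots(train, n_features=2, n_epochs=50, n_snapshots=10)
    single = accuracy(lambda x: 1 if predict_proba(snapshots[-1], x) >= 0.5 else 0, test)
    ensemble = accuracy(lambda x: horizontal_vote(snapshots, x), test)
    print(f"final model: {single:.3f}, horizontal ensemble: {ensemble:.3f}")
```

In a real deep learning setting, each snapshot would be a saved copy of the network (for example, a model file written at the end of each epoch), and the averaged quantity would be the per-class output probabilities. The final prediction would be the class with the largest average probability.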

In this tutorial, you will discover how to reduce the variance of a final deep learning neural network model using a horizontal voting ensemble.

After completing this tutorial, you will know:

- It is challenging to choose a final neural network model when the model has high variance on a training dataset.

- Horizontal voting ensembles provide a way to
