How to Develop a Gradient Boosting Machine Ensemble in Python

Last Updated on September 7, 2020

The Gradient Boosting Machine is a powerful ensemble machine learning algorithm that uses decision trees.

Boosting is a general ensemble technique that involves sequentially adding models to the ensemble, where each subsequent model corrects the errors of the models before it. AdaBoost was the first algorithm to deliver on the promise of boosting.
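To make "subsequent models correct prior models" concrete, here is a minimal from-scratch sketch of discrete AdaBoost for binary labels in {-1, +1}, using one-split decision stumps as the weak learners. Only NumPy is assumed, and the helper names (`fit_stump`, `adaboost_fit`, `adaboost_predict`) are hypothetical. Each round reweights the training samples so that the next stump concentrates on the samples the ensemble currently gets wrong.

```python
import numpy as np


def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best one-split classifier.

    y holds labels in {-1, +1}; w holds sample weights that sum to 1.
    """
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = np.where(X[:, j] <= t, polarity, -polarity)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best


def stump_predict(X, j, t, polarity):
    return np.where(X[:, j] <= t, polarity, -polarity)


def adaboost_fit(X, y, n_rounds=20):
    n = len(y)
    w = np.full(n, 1.0 / n)  # every sample starts with the same weight
    ensemble = []
    for _ in range(n_rounds):
        err, j, t, pol = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite for perfect stumps
        alpha = 0.5 * np.log((1 - err) / err)  # this stump's vote in the final ensemble
        pred = stump_predict(X, j, t, pol)
        # Misclassified samples (y * pred == -1) get heavier, so the next stump focuses on them.
        w = w * np.exp(-alpha * y * pred)
        w /= w.sum()
        ensemble.append((alpha, j, t, pol))
    return ensemble


def adaboost_predict(X, ensemble):
    # Weighted vote of all stumps; ties go to the positive class.
    score = sum(alpha * stump_predict(X, j, t, pol) for alpha, j, t, pol in ensemble)
    return np.where(score >= 0, 1, -1)


# Toy problem with a diagonal boundary that no single stump can fit.
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(200, 2))
y = np.where(X[:, 0] + X[:, 1] > 0, 1, -1)

single = adaboost_fit(X, y, n_rounds=1)
boosted = adaboost_fit(X, y, n_rounds=20)
print("1 stump  :", np.mean(adaboost_predict(X, single) == y))
print("20 stumps:", np.mean(adaboost_predict(X, boosted) == y))
```

The sequence of weighted stumps approximates the diagonal boundary much better than any single stump, which is the sense in which later models correct earlier ones.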

Gradient boosting is a generalization of AdaBoost that improves the performance of the approach and borrows ideas from bootstrap aggregation to further improve the models, such as randomly sampling the rows and features used to fit each ensemble member.
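The following is a minimal NumPy-only sketch of gradient boosting for regression with squared-error loss. For that loss the negative gradient is simply the residual y - F(x), so each new stump is fit to the current residuals and added with a shrinkage factor (the learning rate). Each stump also sees a random subset of rows (drawn without replacement, as in stochastic gradient boosting) and a random subset of features. All function names are hypothetical; the `subsample` and `max_features` argument names are borrowed from scikit-learn's parameters of the same name, but this is an illustration, not scikit-learn's implementation.

```python
import numpy as np


def fit_regression_stump(X, r, features):
    """Least-squares stump on the given columns: (feature, threshold, left_value, right_value)."""
    best, best_sse = None, np.inf
    for j in features:
        for t in np.unique(X[:, j])[:-1]:  # drop the max so both sides are non-empty
            left = X[:, j] <= t
            lv, rv = r[left].mean(), r[~left].mean()
            sse = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
            if sse < best_sse:
                best, best_sse = (j, t, lv, rv), sse
    if best is None:  # no valid split (constant feature): fall back to a constant fit
        best = (features[0], np.inf, r.mean(), r.mean())
    return best


def stump_predict(X, stump):
    j, t, lv, rv = stump
    return np.where(X[:, j] <= t, lv, rv)


def gradient_boost_fit(X, y, n_estimators=100, learning_rate=0.1,
                       subsample=0.8, max_features=1, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    f0 = y.mean()  # initial model: the constant that minimizes squared error
    pred = np.full(n, f0)
    stumps = []
    for _ in range(n_estimators):
        residual = y - pred  # negative gradient of the loss 0.5 * (y - pred) ** 2
        rows = rng.choice(n, size=int(subsample * n), replace=False)  # row subsampling
        cols = rng.choice(d, size=max_features, replace=False)  # feature subsampling
        stump = fit_regression_stump(X[rows], residual[rows], cols)
        pred = pred + learning_rate * stump_predict(X, stump)  # shrunken correction
        stumps.append(stump)
    return f0, learning_rate, stumps


def gradient_boost_predict(X, model):
    f0, learning_rate, stumps = model
    return f0 + learning_rate * sum(stump_predict(X, s) for s in stumps)


# Toy regression problem: a nonlinear signal plus a little noise.
rng = np.random.default_rng(1)
X = rng.uniform(-3, 3, size=(300, 3))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(0, 0.1, size=300)

model = gradient_boost_fit(X, y, n_estimators=200)
mse = np.mean((y - gradient_boost_predict(X, model)) ** 2)
print(f"baseline MSE {np.var(y):.3f} -> boosted MSE {mse:.3f}")
```

Replacing the residual with the negative gradient of a different differentiable loss is what makes the method "gradient" boosting; with squared error the two coincide, which keeps this sketch short.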

Gradient boosting performs well, and often best, on a wide range of tabular datasets, and implementations of the algorithm such as XGBoost and LightGBM often play an important role in winning machine learning competitions.

In this tutorial, you will discover how to develop Gradient Boosting ensembles for classification and regression.

After completing this tutorial, you will know:

- Gradient Boosting ensemble is an ensemble created from decision trees added sequentially to the model.

- How to use the Gradient Boosting ensemble for classification and regression with scikit-learn.

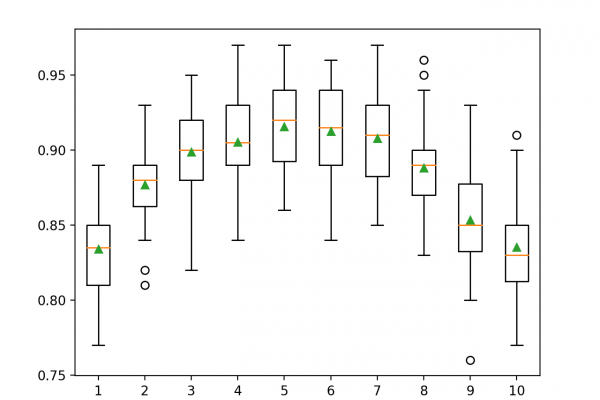

- How to explore the effect of Gradient Boosting model hyperparameters on model performance.
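As a preview of these three points, here is a minimal sketch using scikit-learn's `GradientBoostingClassifier` and `GradientBoostingRegressor`, assuming scikit-learn is installed. The synthetic dataset sizes, the 5-fold cross-validation, and the `n_estimators` values in the sweep are illustrative choices for a quick run, not settings taken from this tutorial.

```python
from numpy import mean
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import GradientBoostingClassifier, GradientBoostingRegressor
from sklearn.model_selection import KFold, StratifiedKFold, cross_val_score

# Small synthetic problems (sizes chosen only so the example runs quickly)
X_clf, y_clf = make_classification(n_samples=500, n_features=10, n_informative=5,
                                   n_redundant=2, random_state=1)
X_reg, y_reg = make_regression(n_samples=500, n_features=10, n_informative=5,
                               noise=0.1, random_state=1)

# Classification: accuracy over 5 stratified folds
clf_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)
acc = cross_val_score(GradientBoostingClassifier(random_state=1), X_clf, y_clf,
                      scoring="accuracy", cv=clf_cv)
print(f"Classifier accuracy: {mean(acc):.3f}")

# Regression: scikit-learn maximizes scores, so MAE is reported negated; flip the sign back
reg_cv = KFold(n_splits=5, shuffle=True, random_state=1)
mae = -cross_val_score(GradientBoostingRegressor(random_state=1), X_reg, y_reg,
                       scoring="neg_mean_absolute_error", cv=reg_cv)
print(f"Regressor MAE: {mean(mae):.3f}")

# Trees are added sequentially; staged_predict yields the ensemble's prediction after each tree
clf = GradientBoostingClassifier(random_state=1).fit(X_clf, y_clf)
staged_acc = [mean(p == y_clf) for p in clf.staged_predict(X_clf)]
print(f"Training accuracy after 1 tree: {staged_acc[0]:.3f}, "
      f"after {len(staged_acc)} trees: {staged_acc[-1]:.3f}")

# Hyperparameter effect: vary the number of trees, keeping everything else at the defaults
sweep = {}
for n in (10, 50, 100):
    model = GradientBoostingClassifier(n_estimators=n, random_state=1)
    sweep[n] = mean(cross_val_score(model, X_clf, y_clf, scoring="accuracy", cv=clf_cv))
    print(f"n_estimators={n}: accuracy {sweep[n]:.3f}")
```

The same loop pattern can be reused for other hyperparameters such as `learning_rate`, `subsample`, or `max_depth`, changing one at a time so their effects are not confounded.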

Let’s get started.

- Update Aug/2020: Added
