How to Create a Bagging Ensemble of Deep Learning Models in Keras

Last Updated on August 25, 2020

Ensemble learning refers to methods that combine the predictions from multiple models.

It is important in ensemble learning that the models that comprise the ensemble are good and that they make different prediction errors. Predictions that are good in different ways can result in a combined prediction that is both more stable and often better than the predictions of any individual member model.

One way to achieve differences between models is to train each model on a different subset of the available training data. This happens naturally when using resampling methods such as cross-validation and the bootstrap, which are designed to estimate the average performance of a model on unseen data. The models fit during this estimation process can be combined into what is referred to as a resampling-based ensemble, such as a cross-validation ensemble or a bootstrap aggregation (or bagging) ensemble.
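To make the idea of a bagging ensemble concrete, here is a minimal sketch using only the Python standard library. It is not the Keras code this tutorial develops: a simple nearest-centroid classifier stands in for a neural network, and the synthetic data and all function names (`make_data`, `fit_centroids`, `bagging_fit`, `bagging_predict`) are assumptions made for illustration. Each member is fit on a bootstrap sample (drawn with replacement) of the training set, and the ensemble prediction is a majority vote.

```python
import random
from collections import Counter

def make_data(n, rng):
    """Synthetic 1-D data: class 0 centred at -1, class 1 centred at +1."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        x = rng.gauss(2 * label - 1, 1.0)
        data.append((x, label))
    return data

def fit_centroids(sample):
    """Stand-in for model training: store the mean x of each class."""
    means = {}
    for label in (0, 1):
        xs = [x for x, y in sample if y == label]
        # If a resample happens to miss a class, fall back to its nominal centre.
        means[label] = sum(xs) / len(xs) if xs else float(2 * label - 1)
    return means

def predict(model, x):
    """Predict the class whose stored mean is closest to x."""
    return min(model, key=lambda label: abs(x - model[label]))

def bagging_fit(train, n_members, rng):
    """Fit one member per bootstrap sample of the training data."""
    members = []
    for _ in range(n_members):
        boot = rng.choices(train, k=len(train))  # sample with replacement
        members.append(fit_centroids(boot))
    return members

def bagging_predict(members, x):
    """Combine member predictions by majority vote."""
    votes = Counter(predict(m, x) for m in members)
    return votes.most_common(1)[0][0]

def accuracy(predict_fn, data):
    return sum(predict_fn(x) == y for x, y in data) / len(data)

rng = random.Random(1)
train = make_data(200, rng)
test = make_data(500, rng)

# An odd number of members avoids tied votes in a two-class problem.
members = bagging_fit(train, n_members=11, rng=rng)
single_accs = [accuracy(lambda x, m=m: predict(m, x), test) for m in members]
ensemble_acc = accuracy(lambda x: bagging_predict(members, x), test)

print("member accuracies:", [round(a, 3) for a in single_accs])
print("ensemble accuracy:", round(ensemble_acc, 3))
```

With a Keras model, the same structure would apply: `fit_centroids` would be replaced by code that defines and fits a network on each bootstrap sample, and the vote would typically be replaced by averaging the predicted class probabilities.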

In this tutorial, you will discover how to develop a suite of different resampling-based ensembles for deep learning neural network models.

After completing this tutorial, you will know:

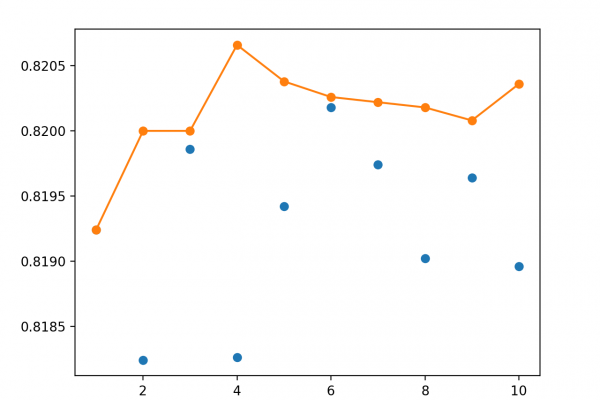

- How to estimate model performance using random splits and develop an ensemble from the models.

- How to estimate
