How to Configure XGBoost for Imbalanced Classification

Last Updated on August 21, 2020

The XGBoost algorithm is effective for a wide range of regression and classification predictive modeling problems.

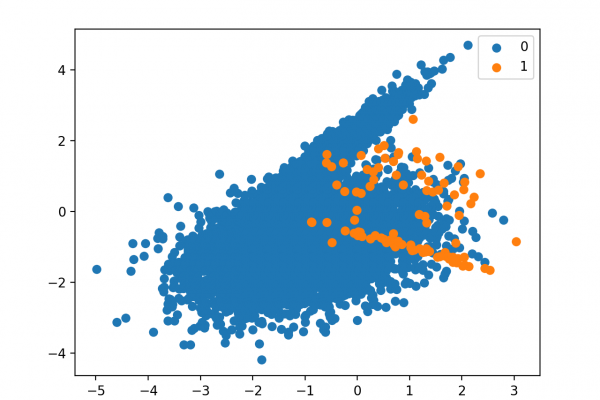

It is an efficient implementation of the stochastic gradient boosting algorithm and offers a range of hyperparameters that give fine-grained control over the model training procedure. The algorithm generally performs well, even on imbalanced classification datasets, and it also offers a way to make training pay more attention to misclassification of the minority class when the class distribution is skewed.

This modified version of XGBoost is referred to as Class Weighted XGBoost or Cost-Sensitive XGBoost and can offer better performance on binary classification problems with a severe class imbalance.
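To make the idea of class weighting concrete, here is a minimal sketch of how a positive class weight can scale the error gradient of the logistic loss for a single example. It is an illustration under stated assumptions, not XGBoost's actual source code: the function name `weighted_logloss_grad_hess` and the weight of 99 are hypothetical choices for the example.

```python
import math


def sigmoid(z):
    """Map a raw score (log-odds) to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def weighted_logloss_grad_hess(raw_score, label, pos_weight=1.0):
    """Gradient and Hessian of the log loss with respect to the raw score.

    Hypothetical helper for illustration: examples with label 1 have their
    gradient and Hessian multiplied by pos_weight; label 0 is left unscaled.
    """
    p = sigmoid(raw_score)
    w = pos_weight if label == 1 else 1.0
    grad = w * (p - label)
    hess = w * p * (1.0 - p)
    return grad, hess


# Same raw score for both examples; only the label differs.
# The weight of 99 is an assumed value, e.g. for a 1:99 class ratio.
g_neg, h_neg = weighted_logloss_grad_hess(0.0, label=0, pos_weight=99.0)
g_pos, h_pos = weighted_logloss_grad_hess(0.0, label=1, pos_weight=99.0)

print("negative example:", g_neg, h_neg)
print("positive example:", g_pos, h_pos)
```

With the same predicted probability, the error on the positive example contributes a much larger gradient, so the next tree is pushed harder to correct mistakes on the minority class.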

In this tutorial, you will discover weighted XGBoost for imbalanced classification.

After completing this tutorial, you will know:

- How gradient boosting works from a high level and how to develop an XGBoost model for classification.

- How the XGBoost training algorithm can be modified to weight error gradients in proportion to the importance of the positive class during training.

- How to configure the positive class weight for the XGBoost training algorithm and how to grid search different configurations.
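As a rough sketch of the last point, the positive class weight in XGBoost is set through the `scale_pos_weight` hyperparameter, and a common starting heuristic is the ratio of negative to positive examples. The snippet below computes that ratio on a synthetic label list and builds a small grid of candidate values. The helper name `estimate_scale_pos_weight`, the synthetic labels, and the candidate values are all assumptions made for illustration.

```python
from collections import Counter


def estimate_scale_pos_weight(labels):
    """Heuristic weight: count of negative (0) labels over positive (1) labels.

    Hypothetical helper for illustration; assumes binary labels 0 and 1.
    """
    counts = Counter(labels)
    if counts[1] == 0:
        raise ValueError("no positive examples in labels")
    return counts[0] / counts[1]


# Synthetic labels with a 99:1 imbalance (assumed data, not from a real dataset).
labels = [0] * 990 + [1] * 10
heuristic_weight = estimate_scale_pos_weight(labels)
print("heuristic scale_pos_weight:", heuristic_weight)

# Candidate values to search around the heuristic (assumed grid).
param_grid = {"scale_pos_weight": [1, 10, 25, 50, 75, 99, 100, 1000]}
print(param_grid)
```

A dictionary like `param_grid` could then be handed to a grid search tool such as scikit-learn's `GridSearchCV` with an `xgboost.XGBClassifier` as the estimator, ideally scored with a metric suited to imbalanced data such as ROC AUC rather than accuracy.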

