How to Configure k-Fold Cross-Validation

Last Updated on August 26, 2020

The k-fold cross-validation procedure is a standard method for estimating the performance of a machine learning algorithm on a dataset.

A common value for k is 10, but how do we know that this configuration is appropriate for our dataset and our algorithms?
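As a point of reference, a 10-fold evaluation might look like the minimal sketch below. It assumes scikit-learn is installed. The synthetic dataset from `make_classification` and the choice of `LogisticRegression` are assumptions made for illustration only, not a setup prescribed here.

```python
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic binary classification data (an illustrative assumption)
X, y = make_classification(n_samples=100, n_features=20, n_informative=15,
                           n_redundant=5, random_state=1)

# 10-fold cross-validation, shuffled with a fixed seed for repeatability
cv = KFold(n_splits=10, shuffle=True, random_state=1)
model = LogisticRegression(max_iter=1000)

# cross_val_score returns one accuracy score per fold
scores = cross_val_score(model, X, y, scoring="accuracy", cv=cv)
print("Accuracy: %.3f (%.3f)" % (mean(scores), std(scores)))
```

The mean of the fold scores is the performance estimate, and the standard deviation gives a rough sense of how much it varies from fold to fold.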

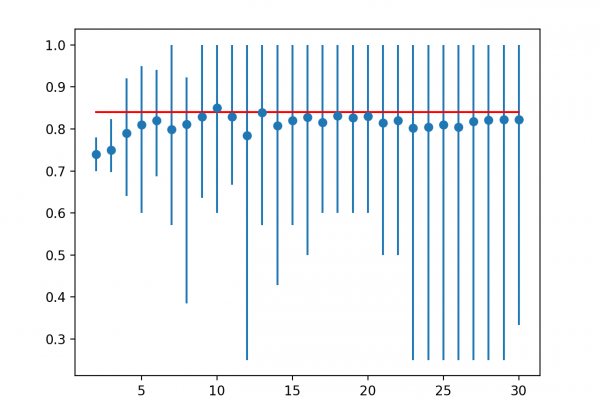

One approach is to explore the effect of different k values on the estimate of model performance and compare this to an ideal test condition. This can help to choose an appropriate value for k.
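A sensitivity analysis of this kind could be sketched as follows. The "ideal test condition" is not defined at this point in the text, so the sketch assumes leave-one-out cross-validation (LOOCV) as a stand-in. The helper name `evaluate`, the synthetic dataset, and the logistic regression model are likewise illustrative assumptions.

```python
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, LeaveOneOut, cross_val_score

def evaluate(cv, X, y):
    """Return mean, min, and max accuracy of a logistic regression under cv."""
    model = LogisticRegression(max_iter=1000)
    scores = cross_val_score(model, X, y, scoring="accuracy", cv=cv)
    return mean(scores), scores.min(), scores.max()

# Synthetic binary classification data (an illustrative assumption)
X, y = make_classification(n_samples=100, n_features=20, n_informative=15,
                           n_redundant=5, random_state=1)

# Assumed ideal test condition: leave-one-out cross-validation
ideal, _, _ = evaluate(LeaveOneOut(), X, y)
print("Ideal (LOOCV): %.3f" % ideal)

# Evaluate each k from 2 to 10 and report how far it is from the ideal
results = {}
for k in range(2, 11):
    cv = KFold(n_splits=k, shuffle=True, random_state=1)
    k_mean, k_min, k_max = evaluate(cv, X, y)
    results[k] = k_mean
    print("k=%d: mean=%.3f (min=%.3f, max=%.3f), diff from ideal=%+.3f"
          % (k, k_mean, k_min, k_max, k_mean - ideal))
```

Values of k whose mean accuracy sits close to the assumed ideal, with a narrow min-to-max range, are reasonable candidates for the dataset.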

Once a value for k is chosen, it can be used to evaluate a suite of different algorithms on the dataset. The distribution of results can then be compared to an evaluation of the same algorithms under an ideal test condition to see whether the two are highly correlated. If they are, this confirms that the chosen configuration is a robust approximation of the ideal test condition.
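The comparison across algorithms can be illustrated with a rough sketch like the one below. It again assumes LOOCV as the ideal test condition and uses the Pearson correlation coefficient from `numpy.corrcoef` as the measure of agreement. The particular set of models and the synthetic dataset are assumptions chosen only to keep the example small.

```python
from numpy import corrcoef, mean
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, LeaveOneOut, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (an illustrative assumption)
X, y = make_classification(n_samples=100, n_features=20, n_informative=15,
                           n_redundant=5, random_state=1)

# A small suite of algorithms to compare (an illustrative selection)
models = [
    LogisticRegression(max_iter=1000),
    KNeighborsClassifier(),
    DecisionTreeClassifier(random_state=1),
    GaussianNB(),
    SVC(),
]

cv = KFold(n_splits=10, shuffle=True, random_state=1)  # chosen configuration
ideal_cv = LeaveOneOut()                               # assumed ideal condition

cv_results, ideal_results = [], []
for model in models:
    # Mean accuracy of the same model under both test harnesses
    cv_mean = mean(cross_val_score(model, X, y, scoring="accuracy", cv=cv))
    ideal_mean = mean(cross_val_score(model, X, y, scoring="accuracy", cv=ideal_cv))
    cv_results.append(cv_mean)
    ideal_results.append(ideal_mean)
    print("%s: cv=%.3f, ideal=%.3f" % (type(model).__name__, cv_mean, ideal_mean))

# Pearson correlation between the two sets of mean accuracies
corr = corrcoef(cv_results, ideal_results)[0, 1]
print("Correlation: %.3f" % corr)
```

A coefficient close to 1 would suggest that the k-fold configuration ranks the algorithms in much the same way as the assumed ideal condition does.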

In this tutorial, you will discover how to configure k-fold cross-validation and how to evaluate the configuration you choose.

After completing this tutorial, you will know:

- How to evaluate a machine learning algorithm using k-fold cross-validation on a dataset.

- How to perform a sensitivity analysis of k-values for k-fold cross-validation.

- How to calculate the
