How to Calculate Precision, Recall, F1, and More for Deep Learning Models

Last Updated on August 27, 2020

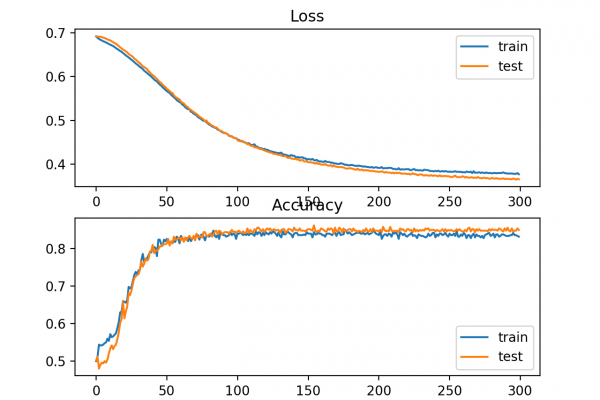

Once you fit a deep learning neural network model, you must evaluate its performance on a test dataset.

This is critical, as the reported performance allows you both to choose between candidate models and to communicate to stakeholders how well the model solves the problem.

The Keras deep learning API offers only a limited set of metrics for reporting model performance.

I am frequently asked questions, such as:

How can I calculate the precision and recall for my model?

And:

How can I calculate the F1-score or confusion matrix for my model?

In this tutorial, you will discover how to calculate metrics to evaluate your deep learning neural network model with a step-by-step example.

After completing this tutorial, you will know:

- How to use the scikit-learn metrics API to evaluate a deep learning model.

- How to make both class and probability predictions with a final model, as required by the scikit-learn API.

- How to calculate precision, recall, F1-score, ROC AUC, and more with the scikit-learn API for a model.
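As a preview of the workflow, here is a minimal sketch rather than the tutorial's own code. It assumes a binary classifier whose predicted probabilities are already available; the `y_true` and `y_prob` arrays are made-up stand-ins for the test labels and for the output of a fitted model's `predict()` call, and the 0.5 threshold is an assumption as well.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, confusion_matrix, f1_score,
                             precision_score, recall_score, roc_auc_score)

# Hypothetical test labels and predicted probabilities (illustrative values only).
# In practice y_prob would come from something like model.predict(X_test).
y_true = np.array([0, 0, 1, 1, 1, 0, 1, 0])
y_prob = np.array([0.1, 0.6, 0.8, 0.4, 0.9, 0.2, 0.7, 0.3])

# Class predictions: threshold the probabilities (0.5 is an assumed cut-off).
y_pred = (y_prob >= 0.5).astype(int)

# Metrics that need crisp class labels.
metrics = {
    "accuracy": accuracy_score(y_true, y_pred),
    "precision": precision_score(y_true, y_pred),
    "recall": recall_score(y_true, y_pred),
    "f1": f1_score(y_true, y_pred),
    # ROC AUC is computed from the probabilities, not the thresholded labels.
    "roc_auc": roc_auc_score(y_true, y_prob),
}
matrix = confusion_matrix(y_true, y_pred)

for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
print(matrix)
```

Note that the sketch keeps two versions of the predictions: thresholded class labels for precision, recall, F1, and the confusion matrix, and raw probabilities for ROC AUC.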
