How to Automatically Generate Textual Descriptions for Photographs with Deep Learning

Last Updated on August 7, 2019

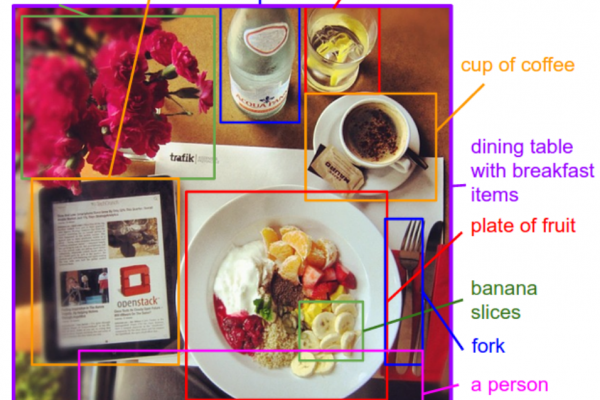

Captioning an image involves generating a human-readable textual description of an image, such as a photograph.

It is an easy problem for a human but a very challenging one for a machine, because it involves both understanding the content of an image and translating that understanding into natural language.

Recently, deep learning methods have displaced classical methods and are achieving state-of-the-art results for the problem of automatically generating descriptions, called “captions,” for images.

In this post, you will discover how deep neural network models can be used to automatically generate descriptions for images, such as photographs.

After completing this post, you will know:

- About the challenge of generating textual descriptions for images and the need to combine breakthroughs from computer vision and natural language processing.

- About the elements that make up a neural captioning model, namely the feature extractor and the language model.

- How the elements of the model can be arranged into an Encoder-Decoder, possibly with the use of an attention mechanism.

Let’s get started.