Ensemble Neural Network Model Weights in Keras (Polyak Averaging)

Last Updated on August 28, 2020

Training a neural network is a challenging optimization problem, and the process can often fail to converge.

As a result, the weights at the end of training may not be a stable or best-performing set to use as the final model.

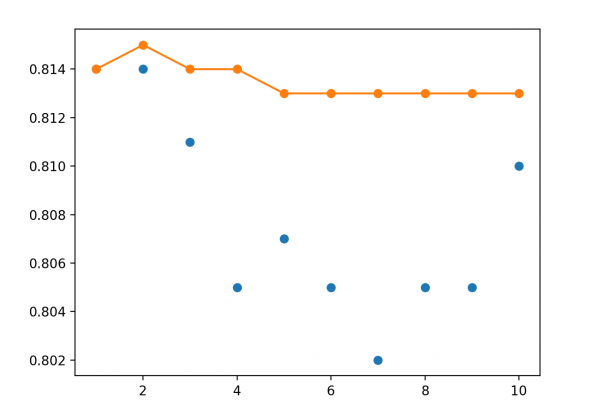

One approach to this problem is to average the weights of multiple models saved toward the end of the training run. This is called Polyak-Ruppert averaging, and it can be further improved by using a linearly or exponentially decreasing weighted average of the model weights, so that older snapshots contribute less than recent ones. In addition to producing a more stable model, the averaged weights can also give better performance.
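To make the three weighting schemes concrete, here is a minimal sketch that computes one averaging coefficient per saved snapshot, ordered oldest to newest. The function name `decay_coefficients` and the decay rate `alpha` are illustrative assumptions, not part of the tutorial or of Keras.

```python
import numpy as np

def decay_coefficients(n_models, scheme="equal", alpha=0.5):
    """Return averaging coefficients for n_models snapshots (oldest first).

    Hypothetical helper for illustration:
      "equal"       -> simple Polyak-Ruppert average
      "linear"      -> weight grows linearly toward the newest snapshot
      "exponential" -> weight decays by exp(-alpha) per step back in time
    """
    positions = np.arange(1, n_models + 1, dtype=float)  # 1 = oldest, n = newest
    if scheme == "equal":
        raw = np.ones(n_models)
    elif scheme == "linear":
        raw = positions
    elif scheme == "exponential":
        # newest snapshot gets exp(0) = 1; each older one is scaled by exp(-alpha)
        raw = np.exp(-alpha * (n_models - positions))
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return raw / raw.sum()  # normalize so the coefficients sum to 1

# Compare the schemes for five snapshots
for scheme in ("equal", "linear", "exponential"):
    print(scheme, np.round(decay_coefficients(5, scheme), 3))
```

Normalizing the coefficients to sum to 1 keeps the averaged weights on the same scale as those of any single snapshot.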

In this tutorial, you will discover how to combine the weights from multiple different models into a single model for making predictions.
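As a rough sketch of the combining step (not the tutorial's own code), the hypothetical helper below assumes each model's weights were retrieved with Keras `Model.get_weights()`, which returns a list of NumPy arrays, and that all models share the same architecture. It averages the weights tensor by tensor; the result could then be loaded into a fresh model of that architecture with `Model.set_weights()`.

```python
import numpy as np

def average_model_weights(members, coefficients=None):
    """Combine several models' weights into a single weight list.

    members: list of weight lists, one per model, each shaped like the
             output of Keras `Model.get_weights()` (same architecture).
    coefficients: optional per-model weights; defaults to an equal average.
    """
    n_models = len(members)
    if coefficients is None:
        coefficients = np.full(n_models, 1.0 / n_models)
    coefficients = np.asarray(coefficients, dtype=float)
    coefficients = coefficients / coefficients.sum()  # normalize to sum to 1

    averaged = []
    # zip(*members) groups the i-th weight tensor of every model together
    for tensors in zip(*members):
        stacked = np.stack(tensors)  # shape: (n_models, *tensor_shape)
        # weighted sum over the model axis
        averaged.append(np.tensordot(coefficients, stacked, axes=1))
    return averaged

# Toy stand-ins for two snapshots of a model with one Dense layer (kernel + bias)
snap_a = [np.zeros((3, 2)), np.zeros(2)]
snap_b = [np.ones((3, 2)), np.full(2, 2.0)]
avg = average_model_weights([snap_a, snap_b])
# With a real Keras model you would then call: final_model.set_weights(avg)
```

Passing unequal `coefficients` (for example, larger values for later snapshots) turns the same helper into a weighted average.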

After completing this tutorial, you will know:

- The stochastic and challenging nature of training neural networks can mean that the optimization process does not converge.

- Creating a model with the average of the weights from models observed towards the end of a training run can result in a more stable and sometimes better-performing solution.

- How to
